Due to excessive homesickness for Wellington’s hills lately, I decided that it would be nice to visit the next best thing and go climb the local volcano – Rangitoto Island.

Approaching Rangitoto from Auckland CBD via ferry

Rangitoto is part of the Auckland Volcanic Field, having erupted less than 600 years ago, and is clearly visible from Takapuna Beach, Mission Bay and various other locations.

This field is now considered dormant, but based on the size of Rangitoto, if any of the volcanoes in this field ever became active again, I’d be getting out of here as fast as I possibly could. (Although all Aucklanders would perish stuck in traffic trying to get out, so I’d probably use the precious moments left to hug my Linux server and tell it how much it means to me instead.)

Rangitoto is a particularly interesting trip, not just because it’s a big volcano slapped alongside NZ’s largest city, but also for its impressive view, many walking trails, interesting human history (Maori, WW2, 20th century) and the fact that you can get extra walks and value by also visiting the neighboring island Motutapu, which is connected and walkable.

I did only a day trip, but I’m seriously considering a multi-day trip out to walk more of the island trails and to camp overnight in the designated camping grounds on Motutapu.

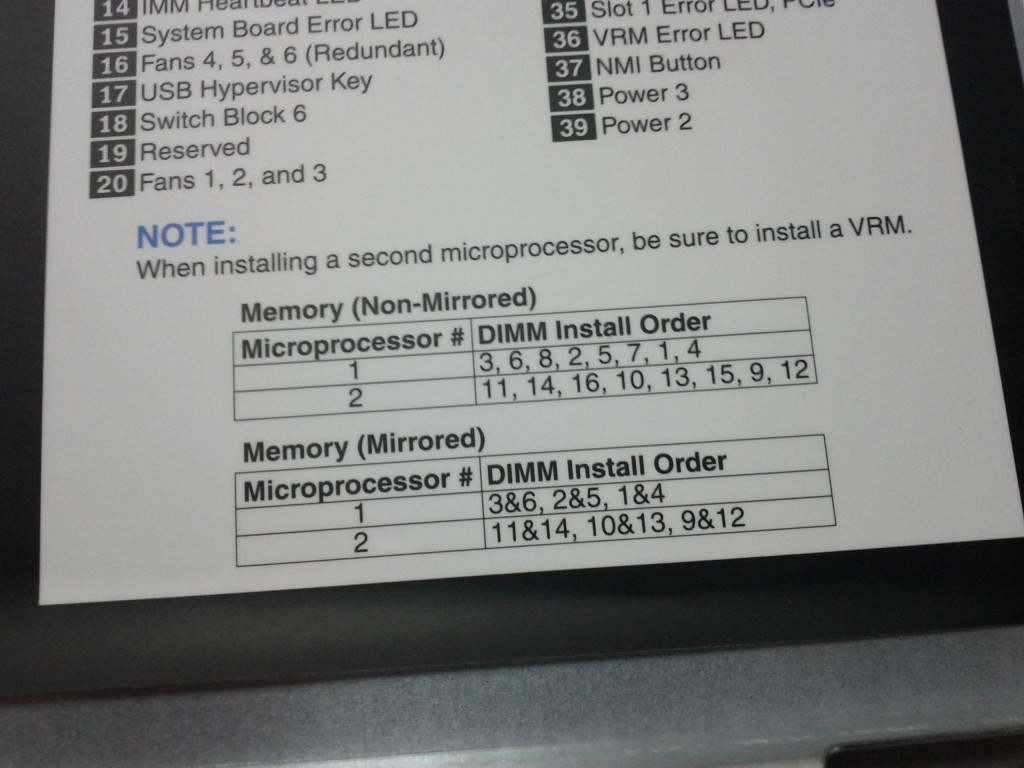

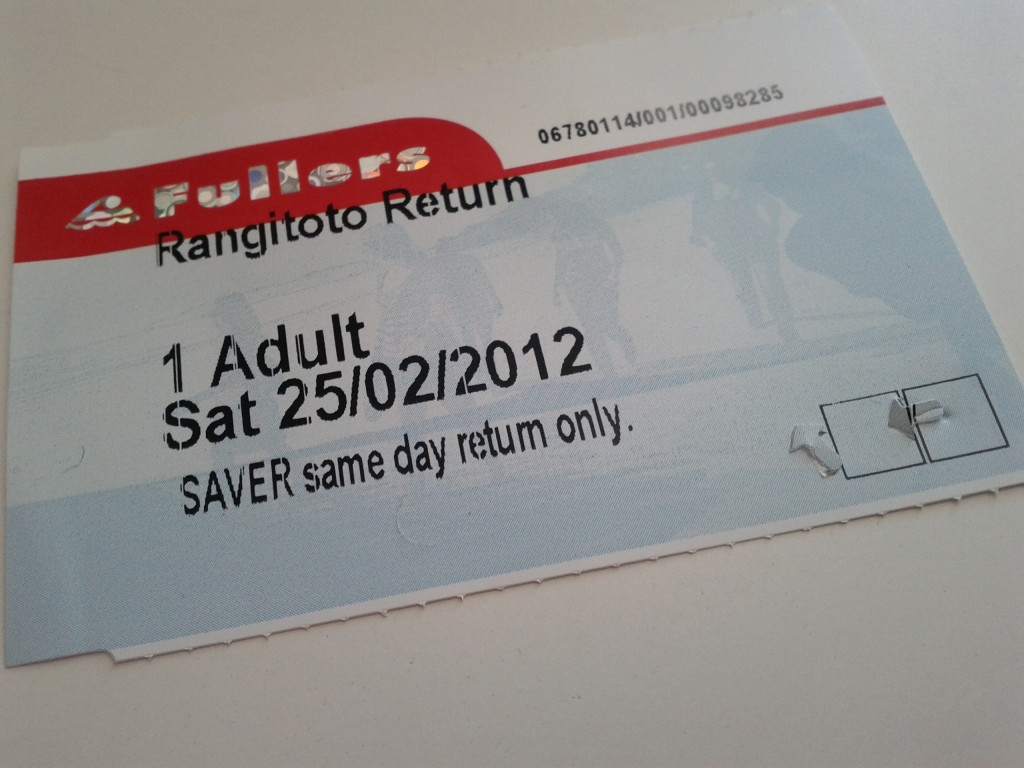

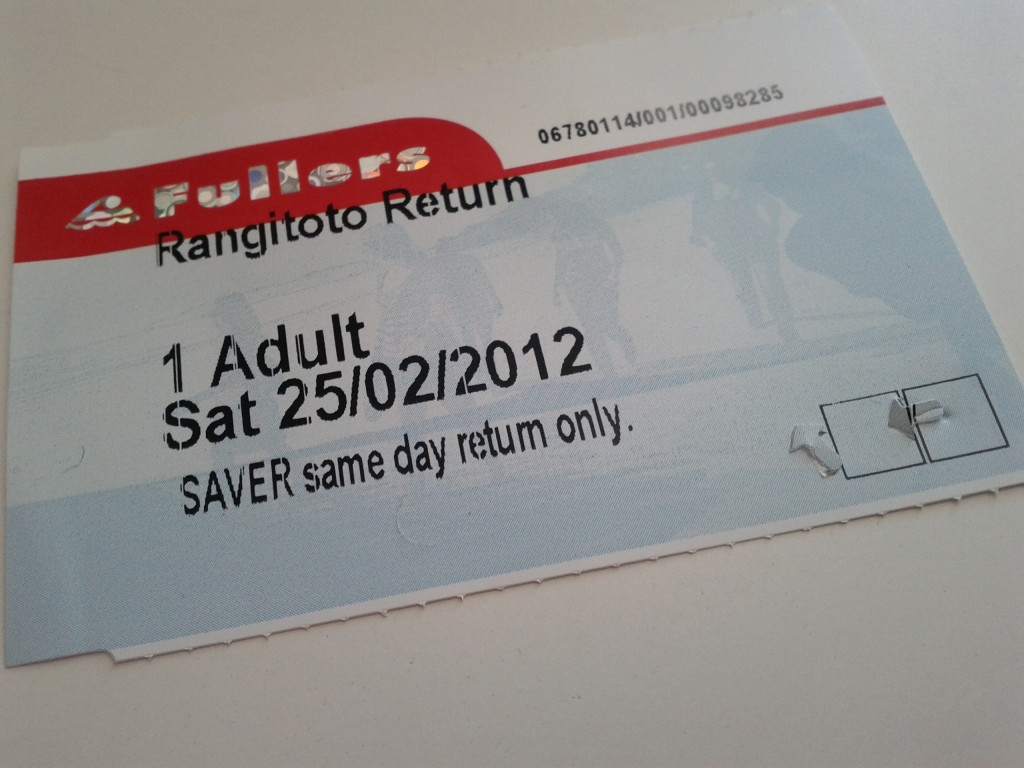

To get there, Fullers run a regular ferry service from Britomart & Devonport out to Rangitoto with several trips a day for $27 return, or $20 if you book online.

There’s a great map and guide which Fullers provides as a download or at the ticket office; if you’re planning a visit, I recommend you grab it. Just don’t trust the timetable 100% without checking the exact trip times for the particular day you’re visiting: if you get it wrong, there’s no overnight accommodation and I think a private water taxi trip back to the CBD would be a bit pricey…

Sitting on the ferry, waiting to depart. Little dubious about the weather.

Cruising out of Auckland CBD, note the harbour bridge in the distance and the North Shore.

Taking the ferry from Britomart rather than Devonport offers some extra views of Auckland’s waterfront, including the cargo port, which I sadly didn’t manage to get pictures of.

As a tip, even if you live on the North Shore, it’s often easier to bus into Auckland CBD and go from Britomart than it is from Devonport, which remarkably always manages to have ridiculous amounts of suburban traffic congestion – I departed from Britomart and returned via Devonport, with the latter taking a good 30mins+ more to get home due to nose-to-tail traffic all the way to Takapuna!

Auckland CBD and cargo port.

Devonport Naval Base with HMNZS Canterbury in port.

Getting a good view of the Devonport Naval Base is pretty neat and offers something that you won’t see around Wellington’s harbour quite so much – when I went past, the HMNZS Canterbury was in port, sadly not out sinking whalers.

Pulling into Devonport Wharf

The William C Daldy Steam Tug at Devonport.

The trip to Rangitoto from Britomart takes around 25-45 mins depending on stopover time at Devonport to load/unload passengers.

Coming into the wharf at Rangitoto

Looking out from Rangitoto towards Auckland.

Rocks, Bush, Sea with a tint of human impact - you'll get a lot of this here.

Once on Rangitoto, the most noticeable trait is the rocks. The entire island is basically one giant pile of jagged rocks (after all, it was formed by a volcano) with plants growing wherever they manage to take root. Often there are weird patches of just rock with a single plant that has managed to grow in the middle.

The rocks themselves vary from being quite porous, to denser formations formed by lava and darker rock where lava came to hit the water. If there are any geologists reading this blog, I’m sure they can comment far more accurately than I ever could about the different formations of rock.

A Geologist's Dreamland?

Porous volcanic rocks litter the island. Interestingly, I didn't come across any pumice though.

Love the red soil, it's like being in Aussie! ;-)

Dense lava flows - this track leads to the lava caves formed by flowing lava leaving a crust/shell which becomes a cave.

After disembarking the ferry I took the most direct path, which pretty much climbs steadily up the mountain until reaching the top lookout. It started off pretty smoothly, but quickly became steeper and had me cursing my fitness, the temperature and the fact that I had to keep pushing as I didn’t want elderly ladies to beat me up the hill.

It is possible to do Rangitoto by road-train tour (read: trailers pulled by a tractor), which seemed popular with a number of tourists, families and elderly, but if you’re young/slightly fit, you’ll miss the whole point and many of the better paths on the island by taking it.

The other popular way to get around seems to be jogging – I’m not into running myself, but even I was starting to enjoy leaping from rock to rock towards the end of my day and it’s certainly a bit of a nicer spot for a run than some random Auckland city road.

Once at the top, the view is pretty amazing and makes all the pain getting up the hill worthwhile.

Crater at the top of the island, just in case you forgot you were on top of a giant exploding mountain.

Bow before Jethro the volcano conqueror, puny Aucklanders! (Looking out at CBD and the North Shore/Devonport)

Looking North-West out over the neighboring Motutapu Island

With a view like that, I had to give the new Android/ICS/4.0 panorama feature a go, but even this doesn’t do it justice. (look ma! I’m like a real professional-photo-taking-person!) ;-)

Panorama of Auckland looking south from the top of Rangitoto.

Looking over the North Shore region and a good view of the island below.

There are a few things to look at up on the summit itself – it’s the home of an old WW2 observation post and there are a couple of other ruins around as well if you do the crater loop walk track.

Does anyone actually know what these are? Summit markers?

I pity the poor suckers who had to lug this cement all the way up to build these bunkers....

The paths around the island vary a lot. There are the standard dirt walking tracks, but you’ll sometimes have nice solid wooden walkways or wide rocky roads. Yet at other times, your path will be a barely discernible route across piles of rocks that are difficult to cross.

The paths also vary hugely between wide open spaces and tight bush tracks, and you can quickly go from one extreme to the other.

I dare say my good sir, this path looks quite civilized, let us wander along and discuss our plans for high tea.

Wide open spaces - DOC workers use some of these roads with utes, if you're lucky a cute one rolls down the window and smiles at you instead of running you down. :-)

I call this path "The Ankle Breaker"

If you head down from the summit towards Islington Bay, you will have the opportunity to take the optional lava caves path (not that great unless you want to actually go into some caves) and also reach the causeway linking Rangitoto Island with the older and now meadow-covered Motutapu Island.

Causeway linking Rangitoto with Motutapu. Despite all their faults, the Americans did some pretty handy road building whilst in NZ during WW2.

The change in scenery between Rangitoto and Motutapu is startling, explained by the fact that Motutapu was here long before Rangitoto appeared and has no geological links otherwise.

Looking out between the two islands.

Sadly I didn’t have time to get over to Motutapu Island. I arrived on the 09:15 ferry and departed on the 15:45 (last one of the day is 17:00) and I pretty much spent the entire time on the move.

Motutapu island has other WW2 sites, beaches and another main bay with a camping site that I would have liked to check out, but it would have been a 3 hour return trip to get there and back and I didn’t fancy gambling with the last ferry of the day home.

One thing to note about Rangitoto (and Motutapu for that matter) is that the walking times on the Fullers map are not to be ignored – I’m a damn fast walker, but even at the best of times I couldn’t beat the stated times by much more than 15%. If it says 2 hours, it’s going to take 2 hours, so don’t try to rush them.

Islington Bay by the Motutapu causeway is worth a visit, although the serenity is a bit ruined when you have a boatload of drinkers playing music in the bay – it’s a popular and accessible area for anyone with a boat.

There’s also the nearby Yankee Bay which has more ramps and could be a bit easier if you’re bringing a small dinghy ashore.

Baches and boat ramps at Islington Bay. There are restored baches around the island.

Calmer, quieter waters.

Ruins of buildings long gone.

After visiting the bay, I took the coastal walk back to Rangitoto Wharf, which takes about 2 hours and is far more rocky than I realized.

I evidently wasn’t the only one caught out. Some poor dude had decided to take this path carrying a kayak, an airport-style luggage bag on wheels and several camping bags of supplies, rather than the road, which would have been 30mins shorter and far, far easier to shift everything along. He would have earned his sleep after arriving at camp that night!

Rocky coastal path - and a random power pole?!?!

The coastal path is mostly bush walking with the occasional open space and scenic sea view. I mostly took it since I wanted to see the mine depot, where sea mines were stored and deployed to protect Auckland Harbour during WW2, but sadly the trail to it was closed so I was unable to visit or even get close enough for a view of it. :-(

After the whole trip, I was pretty exhausted. Sadly I didn’t get a GPS map of my walking activities as I needed to conserve phone battery, but it would have been a good number of kilometers!

Your sexy, rugged, and always modest adventurer strutting the Intel Linux propaganda to all the outdoors fitness fans. :-P

It was good having 30mins or so after the walk to just sit and relax waiting for the ferry.

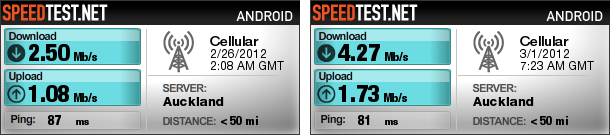

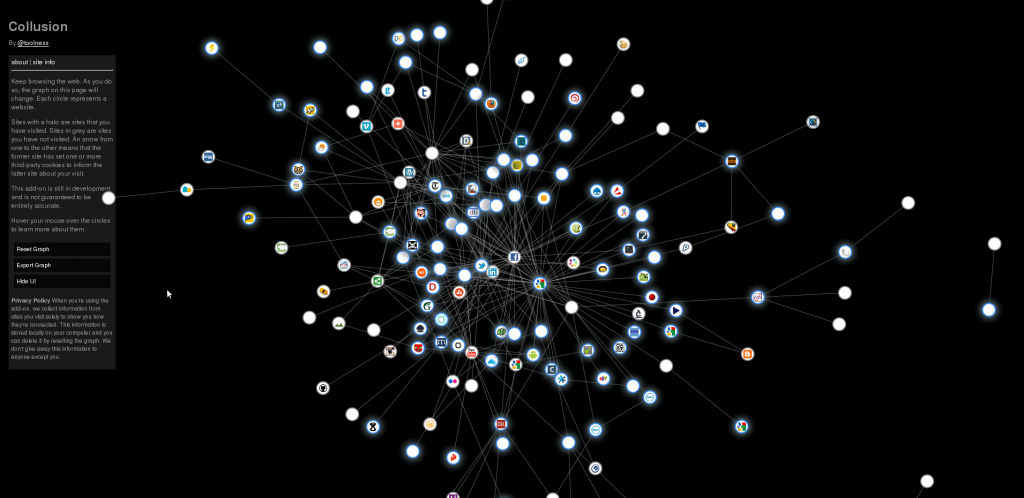

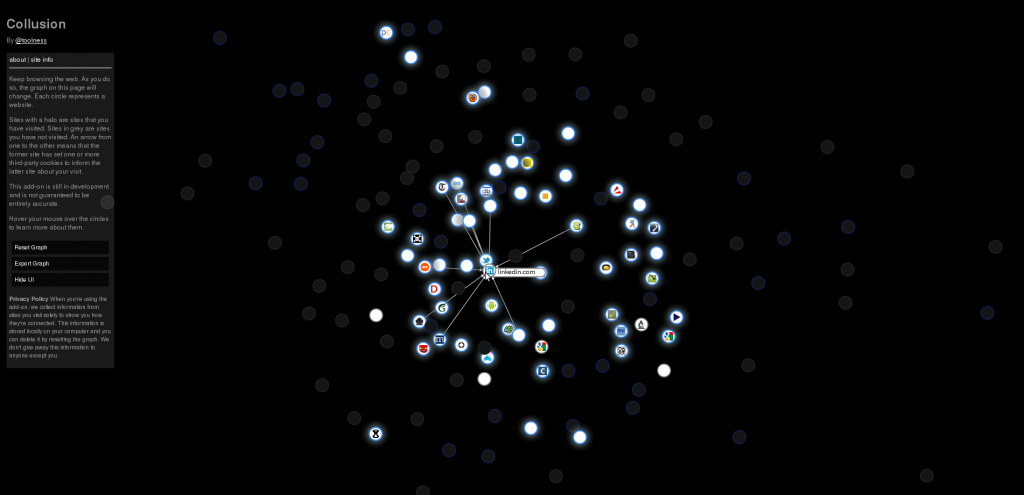

There is cellphone and functional data coverage from parts of Rangitoto – essentially any parts with line-of-sight to Auckland city – if you are addicted to Twitter, Facebook or any of these other hip social media 2.0 things you kids today love. :-P

My ride home after a long day <3

Disembarking at Devonport just as the rain starts.

If I managed to lose this during my walk, would they just leave me stranded on the island?

Overall it was a great trip with some amazing sights and walks and I’d certainly do it again at some point. There are still many parts of the island I have yet to explore: bays with lighthouses, wrecks, quarries and of course everything on Motutapu to see and do.

I was fortunate in that I had an overcast day that, whilst threatening to at points, didn’t quite manage to rain, leaving me dry yet not too hot. I would avoid going on a blazing sunny day – when you get walking uphill or on the bush tracks it gets hot fast and the lava/rocks just love to reflect that heat back at you…

Take plenty of sunscreen (I’ve learned this the hard way), sunglasses, food and water. There is no fresh water on the island; I took and consumed around 2.4 liters of water during the 6 hours I was on the island (that’s 4 typical water bottles) and wouldn’t recommend any less for an adult.

You also want some kind of jacket as it can get cold when you’re exposed and it gets windy – most noticeable when waiting for the ferry on the wharf, where several girls in very skimpy clothing were visibly shivering. And if it rains, there’s not always much shelter, so be prepared to get wet.

As always, NZ conditions can change quickly and with the length and remoteness of the trails on this island compared to inner city walks, you don’t want to be caught short.