It’s been almost 10 months since Lisa and I bought our current house and moved in. Things are going well: having our own place and not paying a landlord is a fantastic and freeing feeling, but home ownership certainly isn’t a free ride and the amount of work it generates is quite incredible.

So what’s been happening around Carr Manor since we moved in?

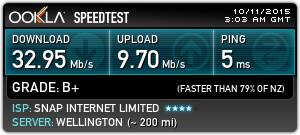

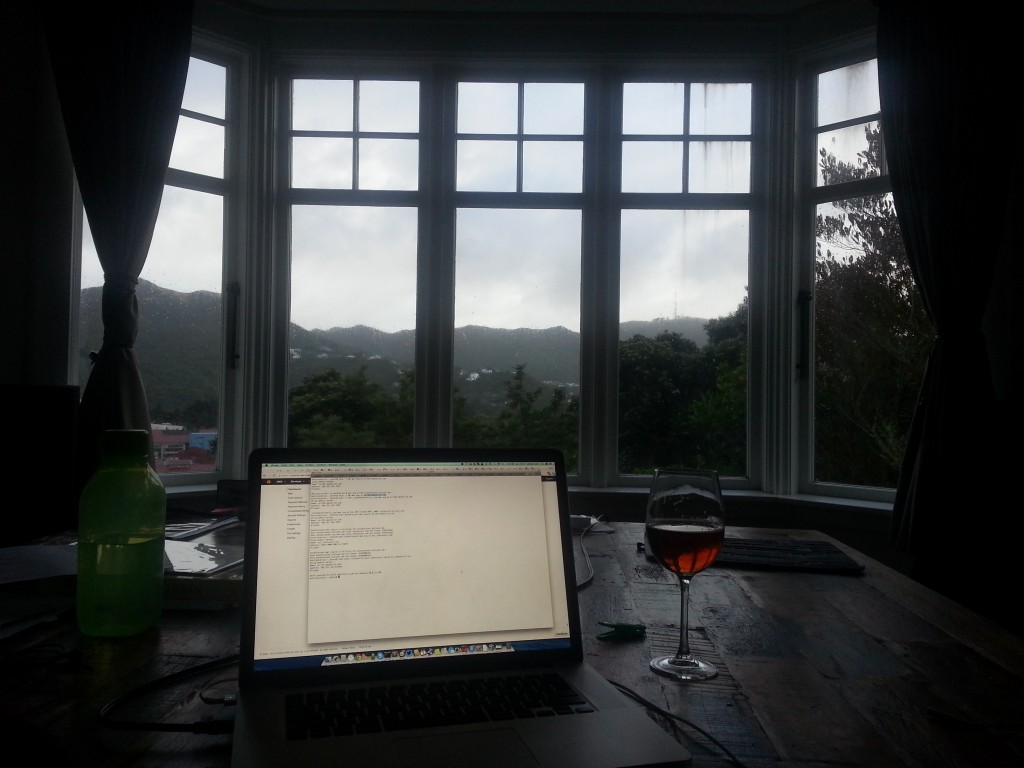

Can’t beat Wellington on a good day!

Generally the house is in good shape, and most of my time has been spent in the grounds of the estate clearing paths, overgrown vegetation and various other missions. However, we have had a couple of smaller issues with the house itself.

The most serious one is that part of the iron roof in the house was leaking due to what looks like a number of different patch jobs combined with a nice unhealthy dose of rust.

Hmm cracks in the roof that let water in == bad right?

The outside doesn’t look a whole lot better.

The “proper” fix is that this section of roof needs replacing at some point as it’s technically well past EOL, but roof replacement is expensive and a PITA, so I’ve fixed the issue by stripping off as much rust as I could and then re-sealing the roof using Mineral Brush-On Underbody Seal.

In case you’re wondering, yes, the same stuff that you can use on cars. It’s basically liquid tar, completely waterproof and ever so wonderful at sealing leaky roofs. I liberally applied a few cans over flashings, patches and the iron itself, getting a nice thick seal.

Repair!

The same stuff did wonders on the rusted shed roof flashing as well.

Up next I need to complete a repaint of both sheds and the house roof. I’m probably going to do a small job in whatever colour I have lying around for the worst part of the roof and then go over the whole roof again at a later stage when we decide on a colour for the full repaint.

The other issue we had was that one of the window hinges had rusted out leaving us with a window that wouldn’t open/close properly.

I’m no expert, but I don’t think hinges are supposed to look like this….

This was a tricky one to fix – the hinge and the screws were so rusted out I couldn’t even remove them. In the end I removed the window simply by tearing the hinge apart when I pulled on it, leaving a shower of rust and, more disturbingly, cockroaches that had been living amongst the bubbled rust.

This left me with two parts of metal hinge stuck in the wall and on the window frame held in by screws that would no longer turn – or in some cases, even lacked heads entirely.

To get them out, I put a very small drill bit into the electric drill and drilled out each screw right down the middle. It’s pretty straightforward once you get it going, but it was a bit tricky to get started – I ended up using the smallest bit I had to make a pilot hole/groove in the screw head, and then upsized the bit to drill in through the screw. Once done, the metal remains tend to just fall out or come out with a little prodding.

I’ve since replaced it with a shiny new hinge and stainless steel screws which should last a lot longer than their predecessors.

Shiny new hardware

Painting has been an “interesting” learning experience. I’ve found it the hardest skill to pick up since it’s just so time consuming and you have to take such extreme care to avoid dripping any paint on other surfaces.

One of my earliest painting jobs was doing the lower gate. This gate spends a lot of time in the shade and even in spring was feeling damp and waterlogged and generally wasn’t looking that sharp – especially given the bolt was a pile of rust barely holding together.

I’m sure unfinished timber looks great when it’s first built, but the moss, dirt and damp don’t lead to it aging well.

Much sharper!

Things like the gate take time and need care, but it’s nothing compared to the absolute frustration of painting window frames where a few mm to the wrong side or a stray bristle leads to paint being smeared across the glass.

I did the French doors initially as the paint had peeled and was starting to expose the timber to the elements. Some of the putty had even fallen out and needed replacing.

Applying painter’s tape to this is one of the most frustrating things I’ve ever had to do :-/

Because I was painting around glass, I applied painter’s tape to the whole thing beforehand. It took hours, was incredibly frustrating and I feel that the end result wasn’t particularly great.

I’ve since found that I can get a pretty tidy result using a sash/trim brush and taking extreme care not to bump the glass, but it is tricky and mistakes do happen. I’m figuring with enough practice I’ll get better at windows… and I have plenty of practice waiting for me with a full house paint job pending. Of course I could pay someone to do it, but at $15k+ for a re-paint, I’m pretty keen to see if I can tackle it myself….

The shed works haven’t proceeded much – I had the noble goal of completely repairing it over summer, but that time just vanished sorting out various other bits and pieces.

On the plus side, thanks to help from one of my colleagues, the shed has been dug out from its previously buried state and the rot and damage exposed – next step is to tear off the rotten weatherboards and doors and replace them with new ones, before repainting the whole shed.

A small 1 metre retaining wall would have been more than enough to protect the shed, but instead the earth has ended up piled around it, causing it to rot and collapse.

I also had help from dad and toppled the mid-size trees that were in-between the shed and the path. Not only were they blocking out light, but they were also going to be a clear issue to shed and path integrity in the future as they got bigger.

Much tidier! Just need to fix the shed itself now…

I’m still really keen to get this shed fixed so intend to make a start on measuring and sourcing the timber soon(ish) and maybe taking a few days off work to line up a block of time to really attack and fix it up.

A more pressing issue has been our pathways. We have two long 30-40 metre concrete paths: a long ramped one (around 20-30 degree slope) up to the upper street and car pad, and another zig-zag path with a mix of ramps and steps heading down to the lower street where the bus stop is.

Neither path is in the best condition. The lower one requires a complete replacement: it’s probably around 80 years old and the non-reinforced concrete has cracked and shifted all over the place.

The upper one is more structurally intact, but has its own share of issues. The first and most serious issue is that the steeper uppermost end gets incredibly slippery in winter. It seems that although the concrete has been brush-finished, whenever it rains any grip it had just vanishes and it basically becomes a slide.

Jethro vs Autumn

Naturally, slipping to a broken leg/face/life isn’t ideal and we’ve been looking at options to fix it. We could convert the steepest bit from a ramp to steps, but steps have their own safety issues and we aren’t keen to lose the ramp as it’s the best way for getting large/heavy items to/from the house.

So a couple of months ago I put down some Resene Non-slip Deck & Path, which is a tough non-slippery paint product that basically includes a whole heap of sand, turning the smooth concrete path into something more like fine sandpaper.

We weren’t too sure about how good it would be, so we put down a 0.5 L strip to test it out on the worst part of the path.

A/B Testing IRL

It doesn’t feel that different to brushed concrete in the dry, but in the wet the difference is night & day and you really do feel a bit more attached to the path. We’ll still need to invest in a decent handrail and fence, but this goes a long way towards an elegant fix.

I’ve since bought another 10 L and painted the upper portion, essentially all the “good” concrete we have. I thought that it might be too dark but actually it looks very sharp and once we put a new fence up (maybe white picket?) it will look very clean and tidy.

Old concrete, as good as new! :-)

The other ~30 metres down to the house isn’t in such good shape: the surface is quite uneven in places and it’s missing chunks. We have a project to do to repair or replace the rest of it. Once that’s done, the intention is to paint the rest of the path in the same colour and it should look and feel great.

All this work requires a fair few tools. I’ve finally cleaned up the dining room where they had been accumulating and they’re now living properly in the shed.

Shed

One of the most interesting lessons I’ve had so far is that buying decent tools is often far cheaper than hiring tradies to do something for you – generally tools are cheap, even decent ones, but labour is incredibly expensive.

Why yes, that is a hardwood lamppost that I’m chainsawing.

The same thing applies to parts, it’s generally cheaper to just buy a new replacement of something than it is to fix it – I’m used to this from the IT world, but didn’t expect it from IRL.

In our case, we had a shower mixer that decided to start letting a constant small stream of water through rather than shutting off properly.

Jethro vs Shower

Taking it apart and even removing it from the wall entirely isn’t too tricky, but I found after removing it all that the issue wasn’t anything trivial like needing a new o-ring and had to call out the plumbers.

The plumbers took it out, looked at it and were all “yeah that needs a new part”, so I ended up paying for the part + the labour – I’d have been better off just buying the whole new part myself and fitting it rather than trying to fix it.

Never underestimate the amount of waste you produce moving into a new place. I filled a skip with 1/3 concrete rubble, 1/3 polystyrene and 1/3 misc waste and there’s still another skip worth of debris around the property, possibly more once I tear all the rotten timber out of the shed.

Polystyrene is my number one enemy right now, almost everything we had shipped to the house when we moved in came with some and it’s crumbly and completely non-recyclable for good measure >:-(.

Where did all this junk come from?

Finally on the inside of the house things haven’t progressed much. Lisa has been working on the interior decor and accessories whilst I’ve done exciting things like overseeing the installation of insulation and fixing the loo in the laundry. :-/

Warming sheep fluff!

I hate plumbing!

I also had a whole bunch of fun with the locks – when we moved in I had the locksmith change the tumblers, but we’ve since found the locks were pretty worn out and the tail pieces inside started failing, so I had to buy whole new locks and fit them.

Turns out, whole new locks are way cheaper than getting the locksmith out to change the tumblers. If you’re moving into an older place, I’d recommend just getting new locks instead since the old ones probably aren’t much good either.

The only downside is that the sizing was slightly different, so I had to do some “creative woodwork” using a drill bit as a file (I didn’t have a file… or the right size drill bit. A bit dodgy, but it worked out OK).

It’s not just the IT world where the lack of standards means a bit of hackery to make stuff function.

Tidy job at the end of the day!

A lot of this work has been annoying in that it’s not directly visible as an improvement, but it’s all been important stuff that needed doing. I’m hoping to spend the next few months getting stuck into some of the bigger improvements like fixing the paths, sheds, etc which will be a lot more visible.

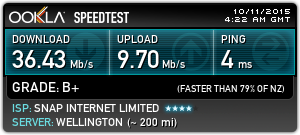

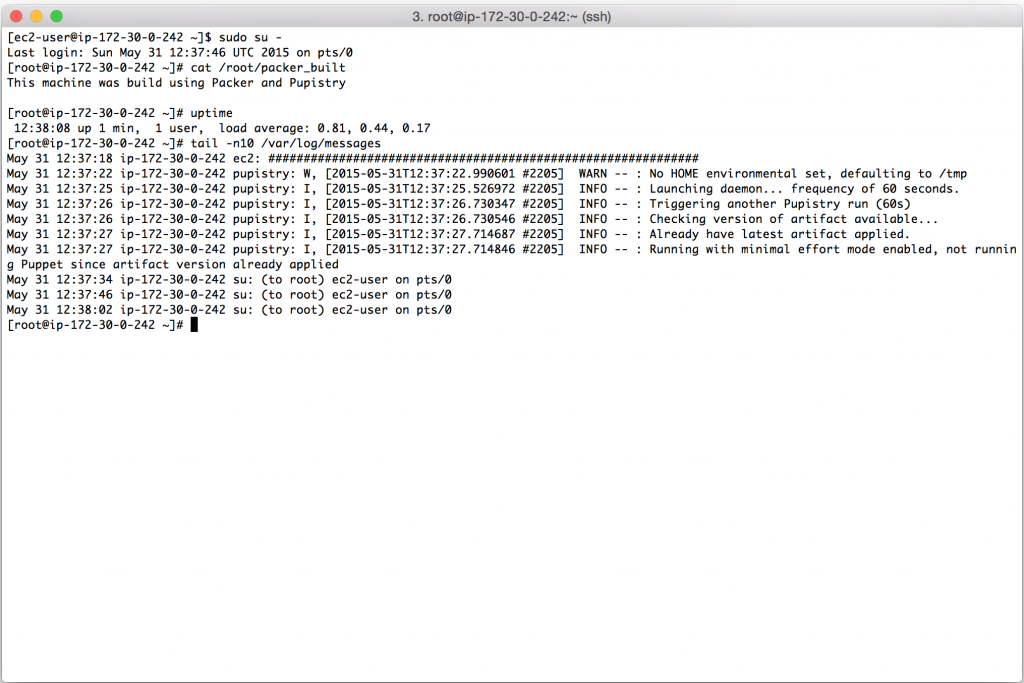

Until then, I need to make more evenings to just sit back, relax and enjoy having our own place – feels like I’ve just been far too busy lately.

Beer time