I’ve been running Debian Stable on my laptop for about 10 months for a number of reasons, but in particular as a way of staying away from GNOME 3 for a while longer.

GNOME 3 is one of those divisive topics in the Linux community: people tend to either love it or hate it. Personally, I find the changes it has introduced impact my workflow negatively, but if I were less of a power user or running Linux on a tablet, I could see the appeal of the way GNOME 3 is designed.

Since GNOME 3 was released, a few new options have arisen for users craving the more traditional desktop environment that GNOME 2 offered. Two of the popular ones are Cinnamon and MATE.

MATE is a fork of GNOME 2, so it duplicates all the old libraries and applications, whereas Cinnamon is an alternative shell for GNOME 3, which means that it uses the GNOME 3 libraries and applications.

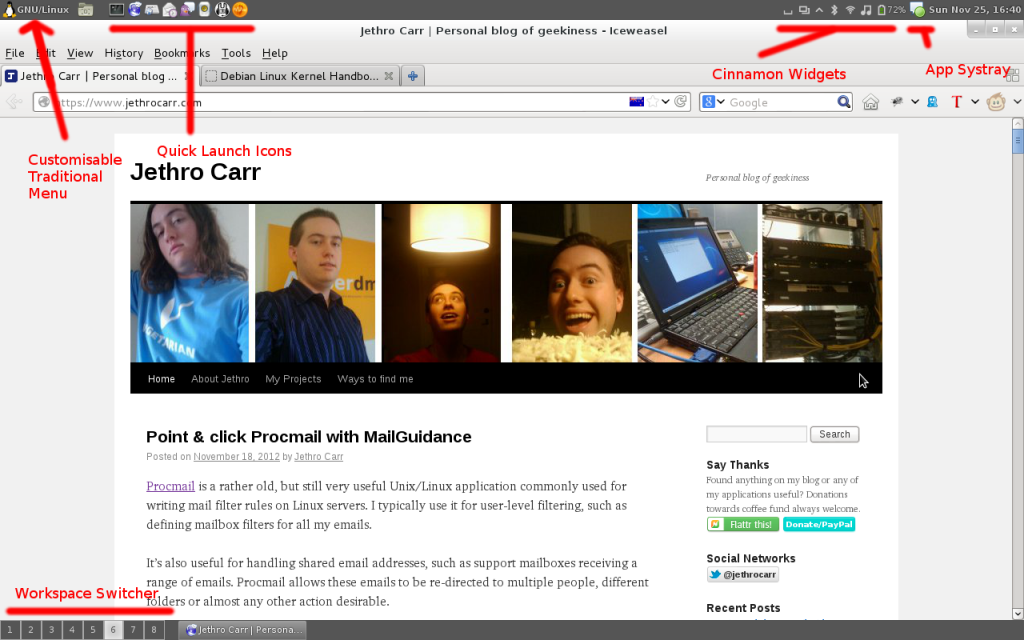

I’m actually a fan of a lot of the software made by the GNOME project, so I decided to go down the Cinnamon path, as it would give me useful features from GNOME 3 such as the latest widgets for Bluetooth, audio, power management and lock screens, whilst still providing the traditional window management and menus that I like.

As I was on Debian Stable, I upgraded to Debian Testing, which provides the required GNOME 3 packages, and then installed Cinnamon from source. That was pretty easy: there are only two packages, and as they are already packaged for Debian, it just takes a dpkg-buildpackage run in each to get installable packages for my laptop.
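For anyone wanting to script that build step, here is a rough sketch in Python rather than my exact commands. It assumes the two source trees are already unpacked in directories called `muffin` and `cinnamon` (my guess at the layout, adjust to suit), and the helper names `build_commands` and `run_builds` are made up for this example. It only prints the commands unless you turn off the dry run.

```python
import subprocess
from pathlib import Path


def build_commands(source_dirs, sign=False):
    """Return (working_directory, argv) pairs, one per source tree."""
    argv = ["dpkg-buildpackage", "-b"]  # -b: build binary packages only
    if not sign:
        argv += ["-us", "-uc"]  # skip signing the source and .changes file
    return [(Path(src), list(argv)) for src in source_dirs]


def run_builds(source_dirs, dry_run=True):
    """Print each build command, or run it when dry_run is False."""
    for cwd, argv in build_commands(source_dirs):
        if dry_run:
            print(f"(cd {cwd} && {' '.join(argv)})")
        else:
            # check=True stops at the first failed build
            subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    # Directory names are assumptions, not taken from a real checkout.
    run_builds(["muffin", "cinnamon"])
```

The resulting .deb files land in the parent directory of each source tree and can be installed with dpkg -i. If the second package depends on the first at build time, you would likely need to install the first set of packages before building the second.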

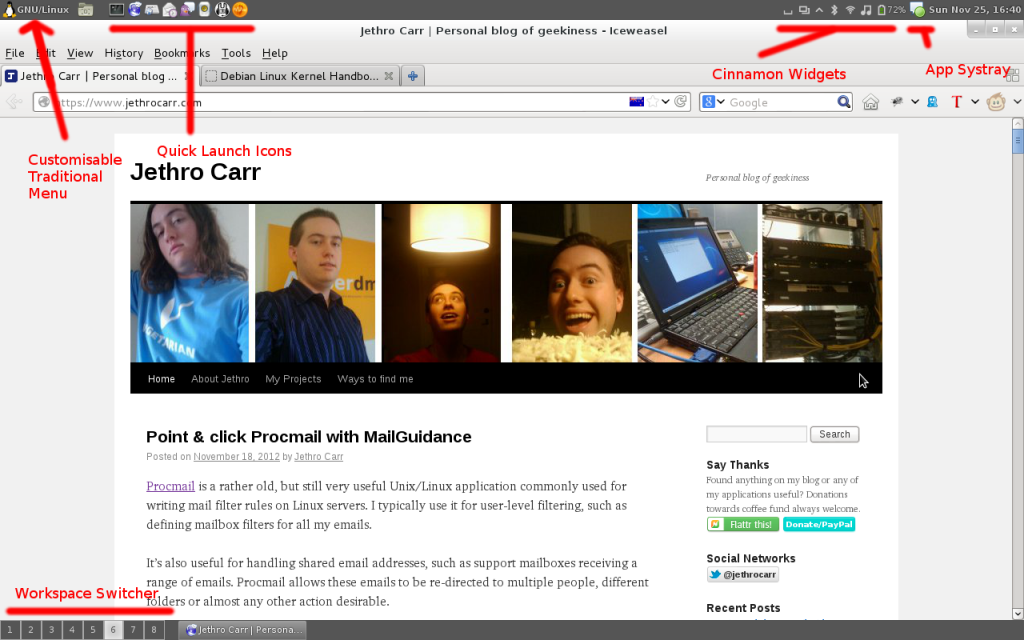

So far I’m pretty happy with it. I’m able to retain my top and bottom menu bar setup and all my favorite GNOME applets and tray features, but also take advantage of a few nice UI enhancements that Cinnamon has added.

All the traditional features we know and love.

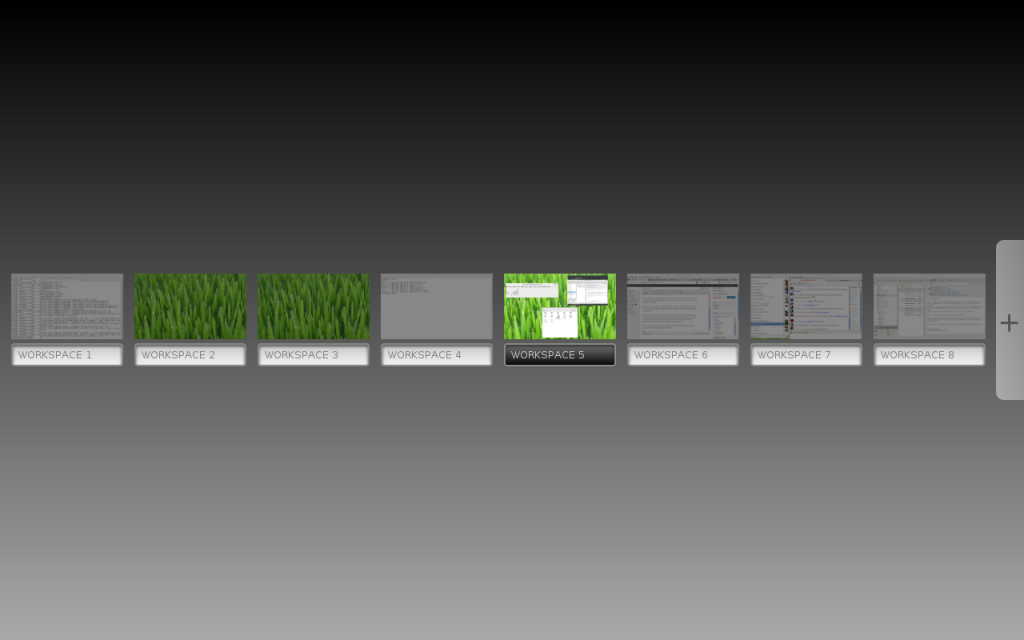

One of the most important features for me was a functional workspace system that allows me to set up the 8 workspaces that I use for different tasks. Cinnamon *mostly* delivers on this: it correctly handles CTRL+ALT+LEFT/RIGHT to switch between workspaces, it provides a taskbar workspace switcher applet, and it lets me set whatever number of workspaces I want to have.

Unfortunately it does seem to have a bug/limitation where the workspace switcher doesn’t display mini icons showing which windows are open on which workspace, something I often use for going “which workspace did I open project blah on?”. I also found that I had to first add the 8 workspaces I wanted by using CTRL+ALT+UP and clicking the + icon, otherwise it defaulted to the annoying dynamic “create more workspaces as you need them” behavior.

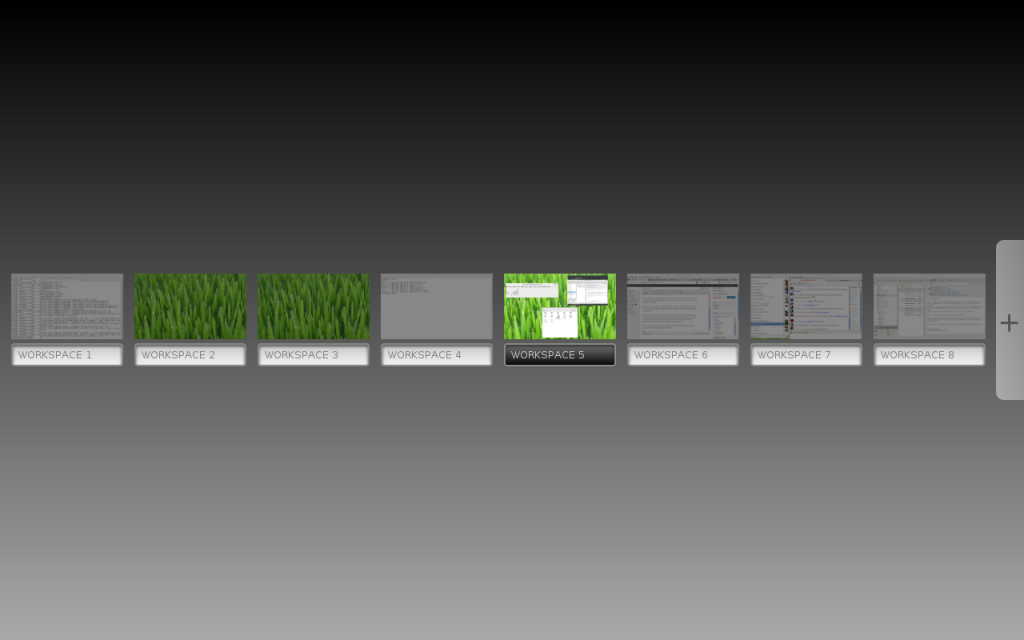

On the plus side, it does offer up a few shinier features such as the graphical workspace switcher, which can be opened with CTRL+ALT+UP, and the window browser, which can be opened with CTRL+ALT+DOWN.

You can never have too many workspaces! If you’re as anal-retentive as me you can go and name each workspace as well.

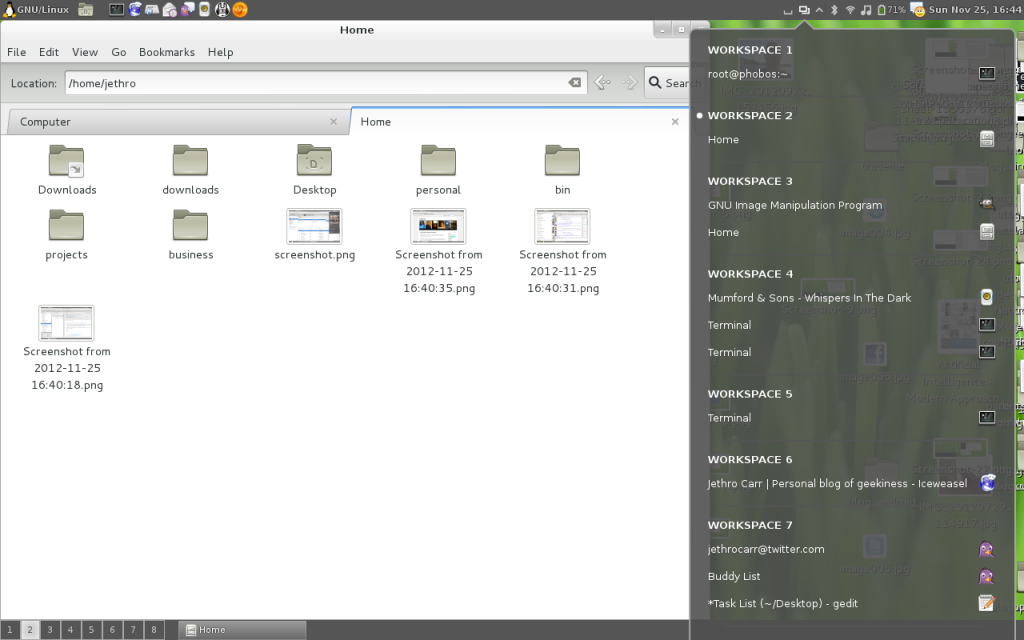

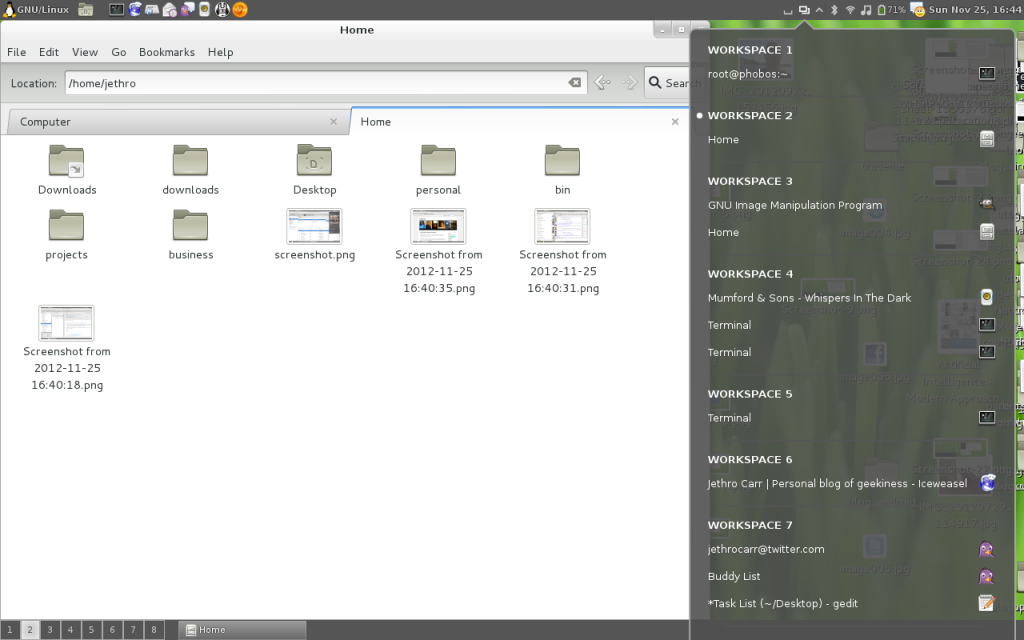

There are also a few handy new applets that may appeal to some, such as the multi-workspace window list, which lets you select any open window across any workspace.

Window applet dropdown, with Nautilus file manager off to the left.

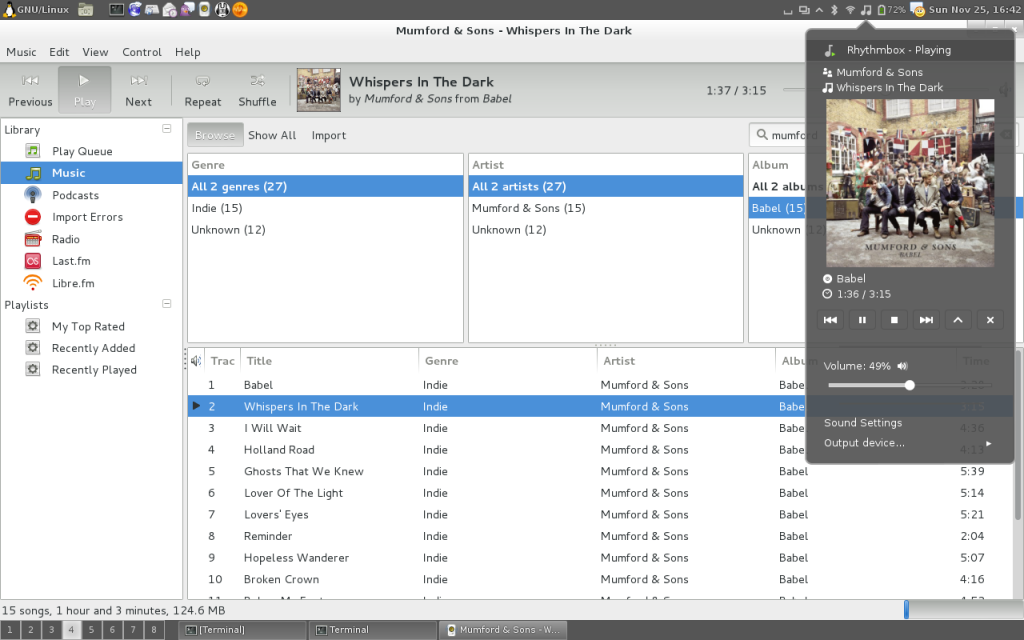

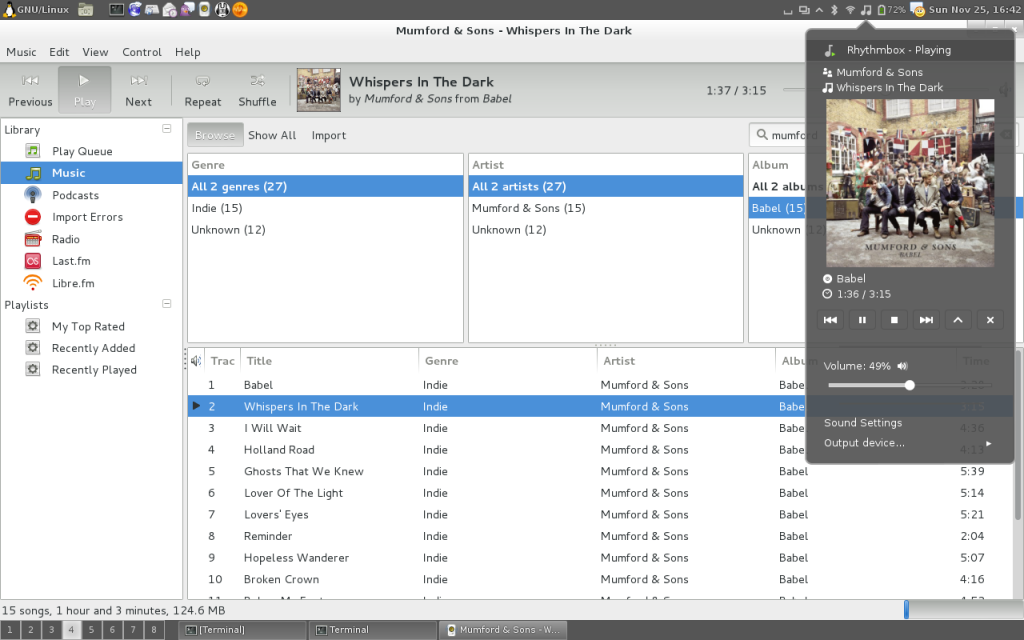

I use Rhythmbox for music playback. I’m not a huge fan of the application, mostly since it doesn’t cope well with playing content off network shares over WAN links, but it does have a nice simple UI and good integration with Cinnamon:

Break out the tweed jackets and moleskins, you can play your folk rock in glorious GTK-3 graphics.

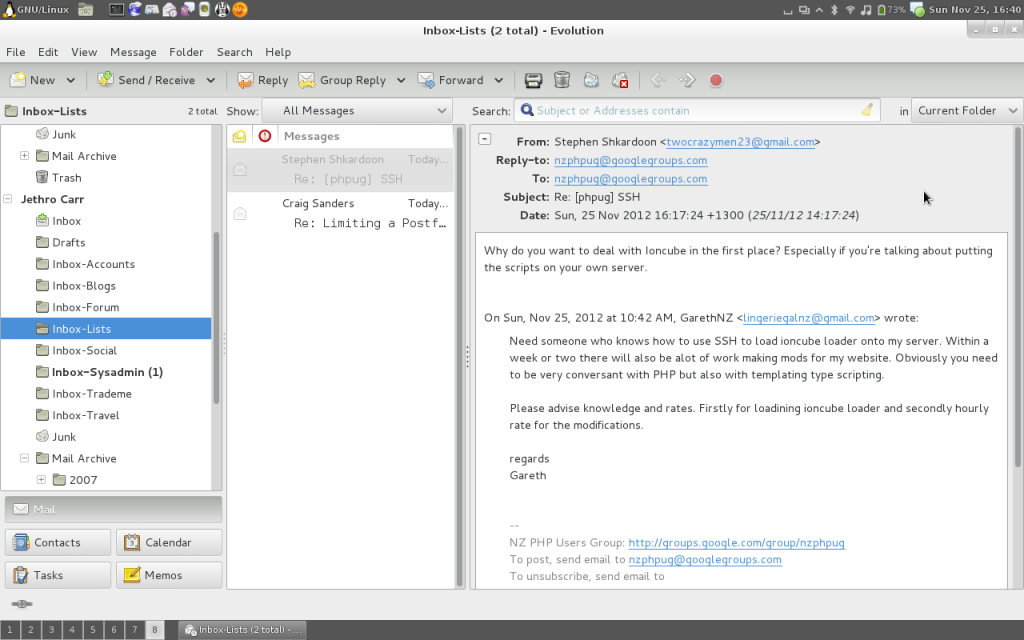

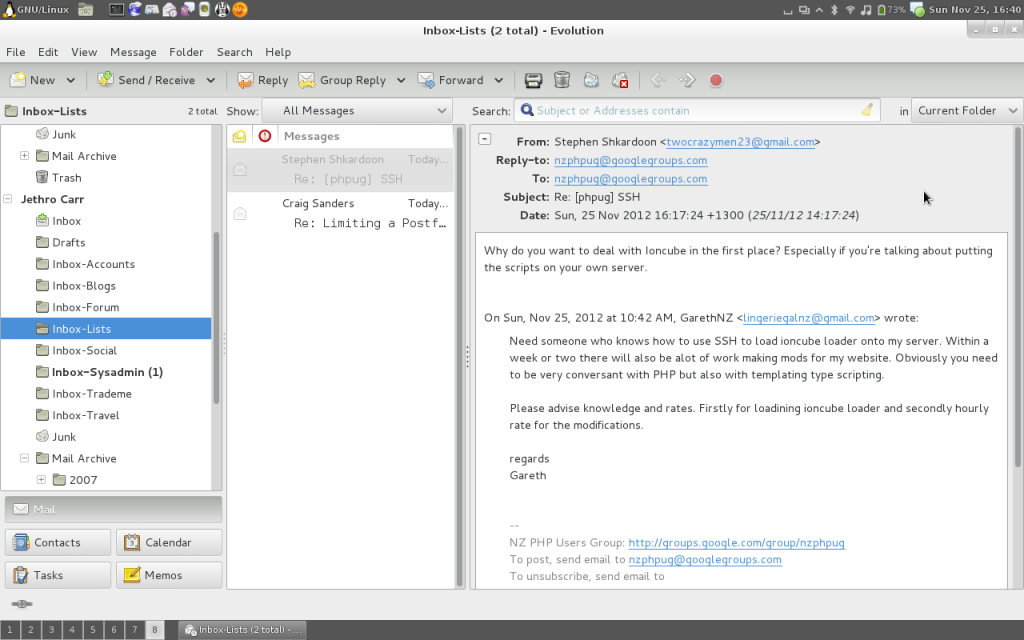

The standard Cinnamon theme is pretty decent, but I do find it has an overabundance of gray, which is quite noticeable when using a window-heavy application such as Evolution.

Didn’t you get the memo? Gray is in this year!

Of course there are a lot of other themes available, so if the grayness gets to you, you have alternatives. You also have the usual options to change the window border styles. That’s something I might do myself, since I’m finding that the chunky window headings waste a bit of my laptop’s very limited screen real estate.

Overall I’m pretty happy with Cinnamon and plan to keep using it for the foreseeable future on this laptop. If you’re unhappy with GNOME 3 and preferred the older environment, I recommend taking a look at it.

I’ve been using it on a laptop with a pretty basic Intel GPU (using the i810 driver) and have had no issues with any of the accelerated graphics; everything feels pretty snappy. There is also a 2D Cinnamon option at login if your system won’t do 3D under any circumstances.