A number of friends are always quite interested in how my personal IT infrastructure is put together, so I’m going to try and do one post a week, covering topics ranging from physical environments, desktop and applications to server environments, monitoring and architecture.

Hopefully this is of interest to some readers. I’ll be upfront and advise that not everything is perfect in this setup: like any large environment, there are always ongoing upgrade projects. Considering my environment is larger than some small ISPs, it’s not surprising that there are areas of poor design or legacy components, but I’ll try to be honest about these deficiencies and where I’m working to make improvements.

If you have questions or things you’d like to know my solution for, feel free to comment on any of the posts in this series. :-)

Today I’m examining my physical infrastructure, including my workstation and my servers.

Since my move to Auckland, my setup has changed a lot from last year and is now based primarily around my laptop and gaming desktop.

All the geekery, all the time

This is probably my most effective setup yet. The table was an excellent investment at about $100 off Trademe, with enough space for 2 workstations plus accessories in a really comfortable and accessible form factor.

My laptop is a Lenovo Thinkpad X201i, with an Intel Core i5 CPU, 8GB RAM, 120GB SSD and a 9-cell battery for long run time. It was running Fedora, but I recently shifted to Debian so I could upskill on the Debian variations some more, particularly around packaging.

I tend to dock it and use the external LCD mostly when at home, but it’s quite comfortable to use directly and I often do when out and about for work – I just find it’s easier to work on projects with the larger keyboard & screen so it usually lives on the dock when I’m coding.

This machine gets utterly hammered. I run this laptop 24×7 and typically have to reboot about once a month, usually after a system crash caused by docking or suspend/resume, something I blame the crappy Lenovo BIOS for.

I have an older desktop running Windows XP for gaming. It’s a bit dated now with only a Core 2 Duo and 3GB RAM and is kind of due for a replacement, but it still runs the games I want quite acceptably, so there’s been little pressure to replace it. Plus, since I only really use it about once a week, it’s not high on my investment list compared to my laptop and servers.

Naturally, there are IBM Model M keyboards for both systems. I love these keyboards more than anything (yes Lisa, more than anything <3 ) and I’m really going to be sad when I have to work in an office again with other people who don’t share my love for loud clicky keyboards.

The desk is a bit messy ATM with several phones and routers lying about for some projects I’ve been working on. I go through stages ranging from extreme OCD tidiness to surrendering to the chaos… fundamentally I just have too much junk to go on it, so I’m trying to downsize the amount of stuff I have. ;-)

Of course this is just my workstations – there’s a whole lot going on in the background with my two physical servers where the real stuff happens.

A couple of years back, I had a lab with 2x 42U racks, which I really miss. These days I’m running everything on two physical machines using Xen and KVM virtualisation for all services. Having the racks was just so expensive and difficult; I’d consider doing it again if I bought a house, but when renting it’s far better to be as mobile as possible.

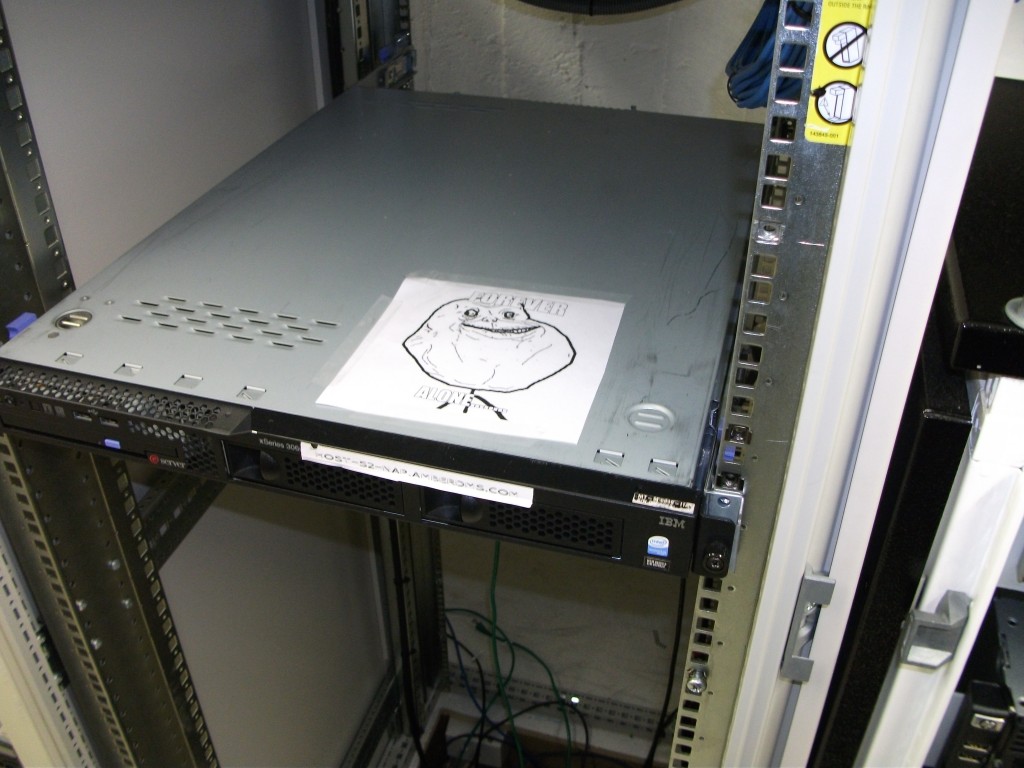

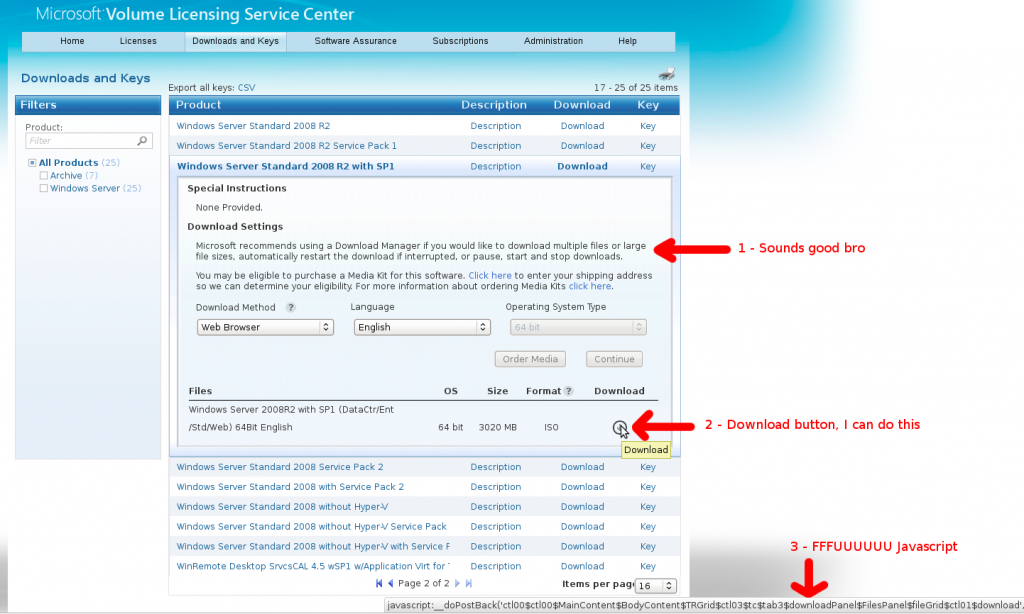

The primary server is my colocation box which runs in a New Zealand data center owned by my current employer:

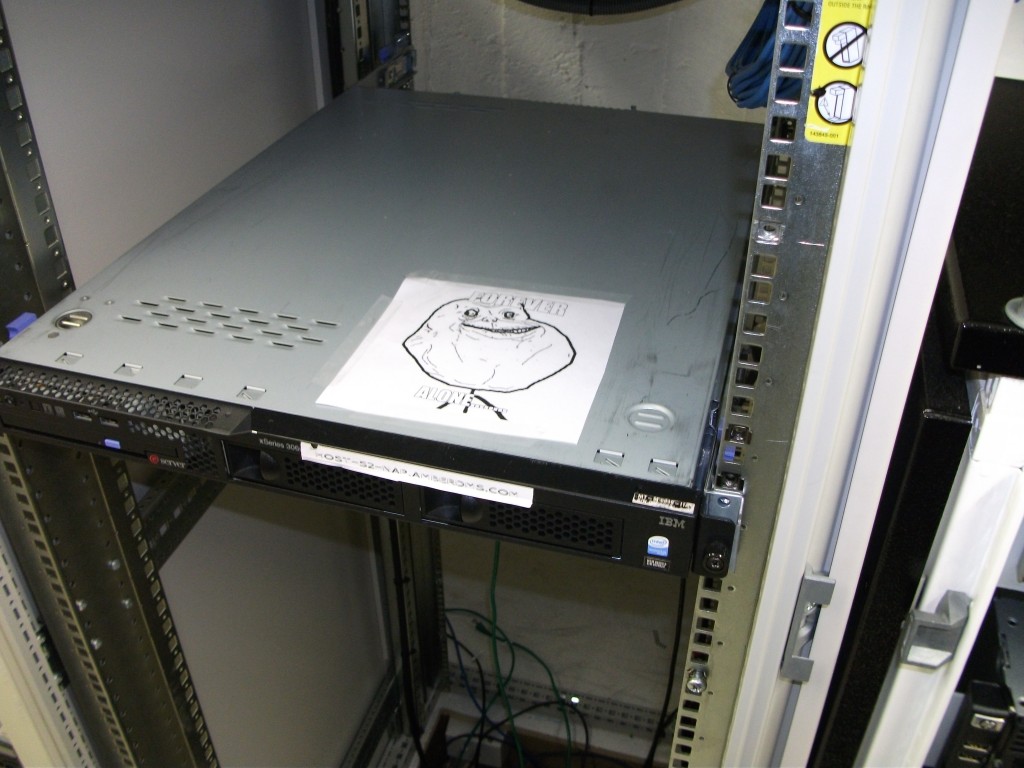

Forever Alone :'( [thanks to my colleagues for that]

It’s an IBM xseries 306m, with a 3.0GHz P4 CPU, 8GB of RAM and 2x 1TB enterprise grade SATA drives, running CentOS (RHEL clone). It’s not the fastest machine, but it’s more than speedy enough for running all my public-facing production services.

It’s a vendor box, as that enabled me to have 3 years of onsite NBD repair support for it. These days I keep a complete hardware spare onsite, since it’s too old to be supported by IBM any longer.

To provide security isolation and easier management, services are spread across a number of Xen virtual machines based on type and risk of attack. This machine runs around 8 virtual machines performing different public-facing services, including my mail servers, web servers, VoIP, IM and more.

For anything not public-facing or critical production, there’s my secondary server, which is a “whitebox” custom build running a RHEL/CentOS/JethroHybrid with KVM for virtualisation, running from home.

Whilst I run this server 24×7, it’s not critical for daily life, so I’m able to shut it down for a day or so when moving house or internet providers and not lose my ability to function. Having said that, an outage of more than a couple of days does get annoying fast…

Mmmmmm my beautiful monolith

This attractive black monolith packs a quad core Phenom II CPU, custom cooler, 2x SATA controllers, 16GB RAM and 12x 1TB hard drives in a full tower Lian Li case. (slightly out-of-date spec list)

I’m running RHEL with KVM on this server which allows me to run not just my internal production Linux servers, but also other platforms including Windows for development and testing purposes.

It exists to run a number of internal production services, file shares and all my development environment, including virtual Linux and Windows servers, virtual network appliances and other test systems.

These days it’s getting a bit loaded: I’m using about 1 CPU core for RAID and disk encryption and usually 2 cores for regular VM operation, leaving about 1 core free for load fluctuations. At some point I’ll have to upgrade, in which case I’ll replace the M/B with a new one to take 32GB RAM and a hex-core processor (or maybe octo-core by then?).
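As a rough back-of-envelope sketch of that core budget, here is the arithmetic in Python. The class and field names are made up for illustration, and it assumes (optimistically) that the RAID/encryption and VM load would stay the same after moving to a hex-core CPU.

```python
from dataclasses import dataclass


@dataclass
class CoreBudget:
    """Approximate CPU core usage on the KVM host (illustrative names only)."""
    total_cores: int
    raid_and_crypto: float  # cores spent on RAID and disk encryption
    vm_load: float          # cores spent on regular VM operation

    @property
    def free(self) -> float:
        # Cores left over for load fluctuations
        return self.total_cores - self.raid_and_crypto - self.vm_load

    @property
    def headroom_pct(self) -> float:
        # Free cores as a percentage of the whole CPU
        return 100.0 * self.free / self.total_cores


# Figures from the post: quad core, ~1 core for RAID/crypto, ~2 for VMs
current = CoreBudget(total_cores=4, raid_and_crypto=1, vm_load=2)

# Assumption: the same load on a hex-core replacement
hex_core = CoreBudget(total_cores=6, raid_and_crypto=1, vm_load=2)

for name, budget in (("quad core", current), ("hex core", hex_core)):
    print(f"{name}: {budget.free} core(s) free, {budget.headroom_pct:.0f}% headroom")
```

With those numbers the current box has about a quarter of its CPU spare, and a hex-core would roughly double that to half, provided the workload doesn’t grow to fill it.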

To avoid nasty sudden poweroff issues, there’s an APC UPS keeping things running and a cheap LCD and ancient crappy PS/2 keyboard attached as a local console when needed.

It’s a pretty large full tower machine, so I expect to be leaving it in NZ when I move overseas for a while, as it’s just too hard to ship and move around with. If I end up staying overseas for longer than originally planned, I may need to consider replacing both physical servers with a single colocated rackmount box to drop running costs and to solve the EOL status of the IBM xseries.

The little black box on the bookshelf with antennas is my Mikrotik Routerboard 493G, which provides wifi and wired networking for my flat, with a GigE connection into the server which does all the internet firewalling and routing.

Other than the Mikrotik, I don’t have much in the way of production networking equipment. All my other kit is purely for development and not always connected, and a lot of the development kit I now run as VMs anyway.

Hopefully this is of some interest. I’ll aim to do one post a week about different areas of my infrastructure, so add this blog to your RSS reader for future updates. :-)