It’s been a busy few weeks – straight after my visit to Christchurch I got stuck into the main migration phase of a new desktop and server deployment for one of our desktop customers.

It wasn’t a small bit of work, going from 20 independent 7-year-old Windows XP desktops to shiny new Windows 7 desktops and moving from Scalix/Linux to Exchange/Win2008R2. It’s not the normal sort of project for me; usually I’ll be dealing with network systems and *nix servers rather than Microsoft shops, but I had some free time and knew the customer site well, so I ended up getting the project.

The deployment was mostly straightforward, and I intend to blog about it in the near future. I honestly found some of the MS tech such as Active Directory quite nice, and it’s interesting comparing the setup to what’s possible in a Linux environment.

However I still have no love for Microsoft Exchange, which has to be one of the most infuriating email systems I’ve had to use. We ended up going with Exchange for this customer because it worked most easily with their MS-centric environment and provides benefits such as ActiveSync for mobiles in future.

Coming from a Linux background, having grown up with solid, easy to debug and easy to monitor platforms like Sendmail, Postfix and Dovecot, I find Exchange an exercise in obscure configuration and infuriating functionality.

To illustrate my point, I’m going to take you on a review of a fault we had with this new setup several days after switching over to the Exchange server…..

* * *

On one particular day, after several days of no problems, the Exchange server suddenly decided it didn’t want to email the upstream smarthost mail server.

The upstream server in question has both IPv4 and IPv6 addresses, something that you tend to want in the 21st century and it’s pretty rare that we have problems with it.

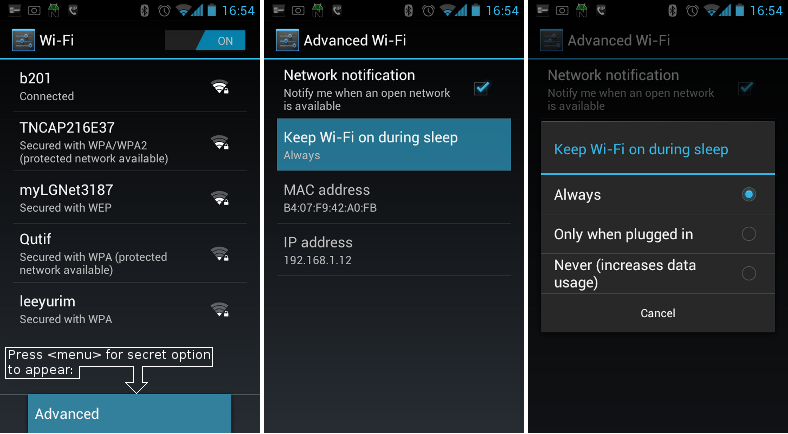

With Exchange 2010 and Windows Server 2008, both components have IPv6 enabled out-of-the-box – we don’t have IPv6 at this particular customer, since the ISP haven’t extended IPv6 beyond the core & colo networks, so we can’t allocate ranges to our customers using them at this stage.

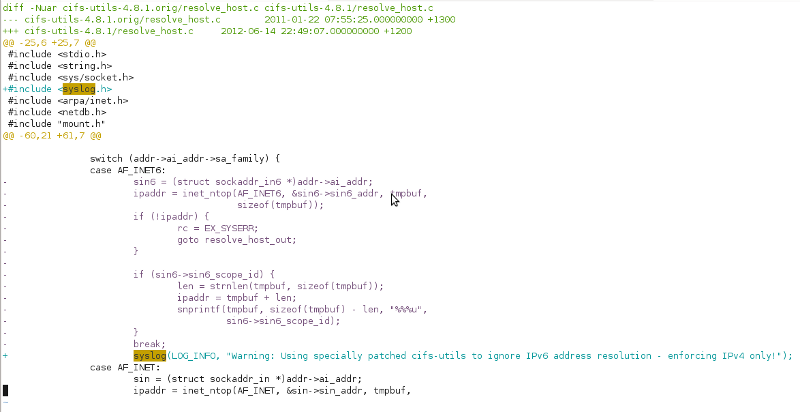

For some unknown reason, the Windows server decided that it would make sense to try connecting to the smart host via IPv6 AAAA record, despite there being no actual upstream IPv6 connection. To make matters worse, it then decided the next most logical thing was to just fail, rather than falling back to the IPv4 A record.
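To make the expected behaviour concrete, here is a minimal Python sketch of the fallback I would have hoped for: try each resolved address in turn (AAAA first, then A) and only give up once all of them have failed. This is purely illustrative and says nothing about how Exchange or Windows actually implement it; `connect_with_fallback` and `tcp_connect` are names made up for this example, and the addresses in the usage note are documentation-range placeholders.

```python
import socket


def tcp_connect(family, host, port, timeout=5.0):
    """Open a TCP connection to a single address of the given family."""
    sock = socket.socket(family, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        sock.connect((host, port))
    except OSError:
        sock.close()
        raise
    return sock


def connect_with_fallback(candidates, connect=tcp_connect):
    """Try each (family, host, port) candidate in order.

    Returns the first successful connection; raises ConnectionError
    only after every candidate has failed.
    """
    errors = []
    for family, host, port in candidates:
        try:
            return connect(family, host, port)
        except OSError as exc:
            # Remember the failure and move on to the next address,
            # e.g. from an unreachable IPv6 address to the IPv4 one.
            errors.append((host, str(exc)))
    raise ConnectionError(f"all {len(errors)} addresses failed: {errors}")
```

With candidates such as `[(socket.AF_INET6, "2001:db8::25", 25), (socket.AF_INET, "192.0.2.25", 25)]`, a host with no IPv6 route would fail on the first entry and then connect via the second. Python's own `socket.create_connection()` already behaves this way, looping over every address returned by `getaddrinfo()`.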

The Windows experts assigned to look at this issue decided the best solution was to “disable IPv6 in Exchange”, something I assumed meant “tell Exchange not to use IPv6 for smarthosts”.

With the issue resolved, no faults occurring and emails flowing, the issue was checked off as sorted. :-)

Later that night, the server was rebooted to make some changes to the underlying KVM platform – however after rebooting, the Windows server didn’t come back up. Instead it was stuck for almost two hours at “Applying computer settings….” at boot – even once the login screen appeared, it still took another 30 minutes before I could log in.

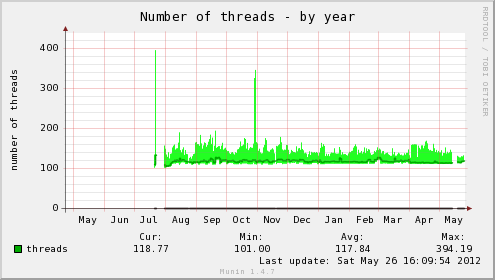

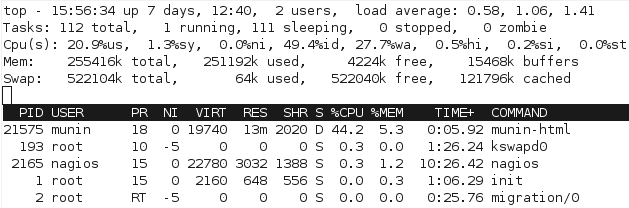

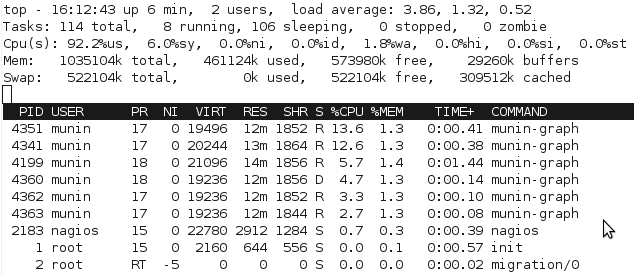

After eventually logging in, the server revealed the cause of the slow startup as being the fault of the “microsoft.exchange.search.exsearch.exe” process running non-stop at 100% CPU.

After killing off that process to get some semblance of a responsive system, it became apparent that a number of key Exchange components were also not running.

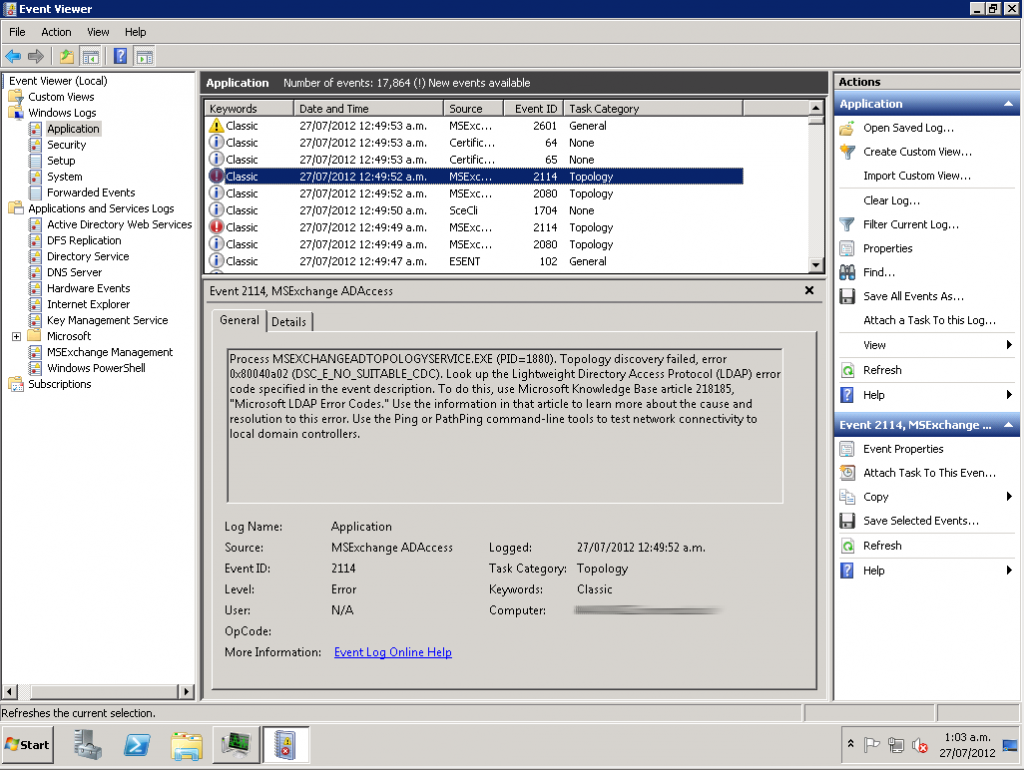

I waded through the maze that is event viewer, to find a number of Exchange errors, in particular one talking about being unable to connect to Active Directory LDAP, with an error of DSC_E_NO_SUITABLE_CDC (Error 0x80040a02, event 2114).

Naturally the first response was to review what changes had been made on the server recently. After confirming that no updates had been installed in the last couple of days, I was left with only one recent change: the IPv6 adjustment made by the Windows engineers earlier in the day.

Reading up on IPv6 support and Windows Server 2008, I came across this gem on microsoft.com:

"From Microsoft's perspective, IPv6 is a mandatory part of the Windows operating system and it is enabled and included in standard Windows service and application testing during the operating system development process. Because Windows was designed specifically with IPv6 present, Microsoft does not perform any testing to determine the effects of disabling IPv6. If IPv6 is disabled on Windows Vista, Windows Server 2008, or later versions, some components will not function."

I then came across this blog post, from someone who had experienced the same error string, but with a different cause. In his post, the author had a handy footnote:

"The biggest red herring I found when troubleshooting this one from articles others had posted was related to IPv6. I see quite a few people suggesting IPv6 is required for Exchange 2007 and 2010. This is NOT true. As a matter of fact, if the server hosting Exchange 2007 or 2010 is a DC, then IPv6 must be enabled otherwise simply uncheck the checkbox in TCP/IP properties on all connected interfaces. You don't need to buggar with the registry to "really disable it"....just uncheck the checkbox."

The customer’s Windows 2008 R2 server is responsible for running both Exchange 2010 and Active Directory.

To resolve the smarthost issue, the Windows team had disabled IPv6 altogether on the interface, resulting in a situation where Exchange was unable to establish a connection to AD to get the information it needed to start up and run.

To resolve, I simply enabled IPv6 for the server and the Exchange processes correctly started themselves within 10 seconds or so as I watched in the Services utility.

This resolved the “Exchange isn’t functioning at all” issue, but still left me with the smarthost IPv6 issue. To work around it for now, I just set the smarthost in Exchange to use the IPv4 address, but I will need a better fix long term.
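For anyone wanting to find the IPv4 address to pin, a small Python sketch like the following asks the resolver for A records only. The function name `ipv4_addresses` and the hostname in the usage note are made up for illustration; this is a lookup helper, not anything Exchange-specific.

```python
import socket


def ipv4_addresses(hostname, port=25):
    """Return the unique IPv4 addresses a hostname resolves to."""
    infos = socket.getaddrinfo(
        hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        # For AF_INET, sockaddr is an (address, port) pair.
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses
```

Calling `ipv4_addresses("mail.example.com")` (a placeholder name) would list the addresses to choose from. The obvious downside of pinning one is that the setting has to be updated by hand if the smarthost is ever renumbered, which is part of why it is only a stopgap.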

With the issue resolved, some post-incident considerations:

- I’m starting to see more cases where a *lack* of IPv6 is actually causing more problems than the presence of it, particularly around mail servers.

- Exchange has some major architectural issues – I would love to know why an internal communication issue caused the search indexer process to go nuts at 100% CPU for hours. I’ve broken Linux boxes in terrible ways before, particularly with LDAP server outages leaving boxes unable to get any user information – they just error out slowly with timeouts, they don’t go and start chewing up 100% CPU. And I can drop them into a lower run level to fix and reboot within minutes instead of hours.

- I did a search and couldn’t find any official Microsoft best practice documentation for Server 2008, nor did Windows Server warn the admin that disabling IPv6 would break key services.

- If Microsoft has published anything like this, it’s certainly not easy to find – microsoft.com is a complete searching disaster. And yes, whilst they have a “best practice analyzer tool”, it’s not really what I want as an admin; I want a doc I can review and check plans against.

- I’m seriously tempted to start adding surcharges for providing support for Microsoft platforms. :-/

* * *

Overall, Exchange certainly hasn’t put itself in my good books. Issues like the IPv6 requirement are understandable, but the side effect of the search indexer going nuts on CPU makes no sense, and it’s pretty concerning that the code doesn’t just say “oh I can’t connect, I’ll close/sleep till later”.
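The “sleep till later” behaviour is not hard to write. As a rough Python sketch (assuming nothing about how the Exchange indexer is actually built; `retry_with_backoff` and its parameters are invented for this example), a service that loses its directory connection can wait with a growing delay between attempts instead of spinning:

```python
import time


def retry_with_backoff(operation, attempts=5, base_delay=1.0,
                       max_delay=60.0, sleep=time.sleep):
    """Call operation() until it succeeds, sleeping between failures.

    The delay doubles after each failed attempt, capped at max_delay,
    so the process sits idle rather than burning CPU while it waits.
    The last failure is re-raised once the attempts are used up.
    """
    delay = base_delay
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except OSError:
            if attempt == attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_delay)
```

Here `operation` would be whatever opens the connection, for example a function that binds to the LDAP server. The `sleep` parameter is only there so the waiting can be swapped out when testing.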

So sorry Microsoft, but you won’t see me becoming a Windows Server fanboy at any stage – my Linux Sendmail/Dovecot setup might not have some of Exchange’s flashier features, but it’s damn reliable, extremely easy to debug and logs in a clear and logical fashion. I can trust it to operate in a logical fashion and that’s worth more to me than the features.