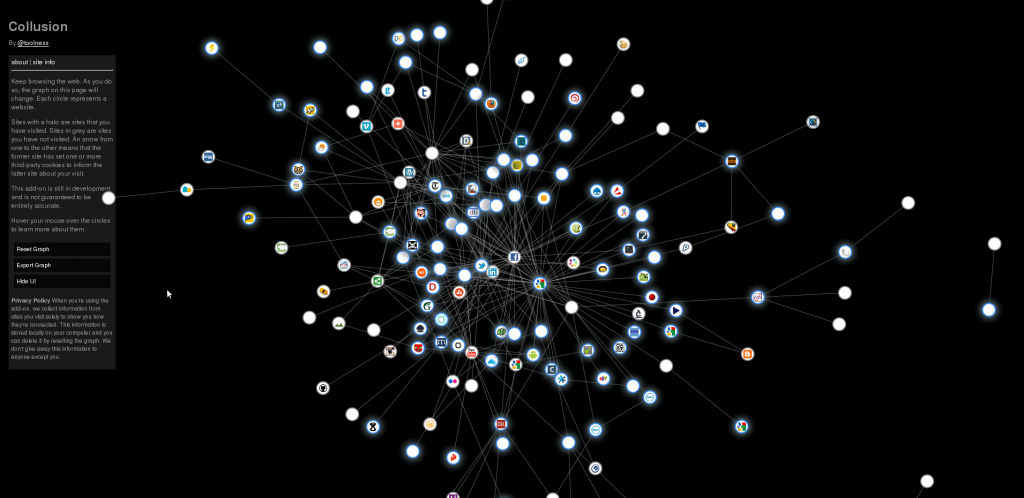

A recurring theme of this blog is that I love to be able to use open standards and open source for storing and accessing my information – the biggest example is of course IMAP for email, but I also use tools such as Mozilla Sync Server for self-reliant synchronization and backup of client device information, without using external cloud providers.

I’ve been a user of Evolution for almost a decade now – sometimes criticized as the “Outlook of Linux”, Evolution provides mail, calendaring, contacts and todo lists to the GNOME desktop, with a pretty large but sometimes slightly buggy feature set. For me personally, it’s always done a great job and it’s my key business productivity tool.

I moved all my mail onto an IMAP server years ago, which makes it easy to shift clients if I ever need to – in my case, pretty much just needing to access mail from both my laptop and smart phone.

However, this hasn’t been the case for other key data such as calendaring and contacts. A few years ago, the open source calendaring solutions available weren’t that well developed, and many clients suffered limitations such as read-only functionality.

Thankfully this has been changing – most clients (*glares angrily at Microsoft Outlook*) now support CalDAV and CardDAV quite reliably, which gives us an open standard that works across different programs, platforms and device types.

- CalDAV is an open standard for the exchange of calendaring and task/todo/memo information between a client and a server.

- CardDAV is an open standard for the exchange of contact/address book information between a client and a server.
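
To make the two bullet points above a little more concrete: CalDAV carries iCalendar objects and CardDAV carries vCards, both as plain text stored over WebDAV. Here’s a minimal Python sketch of what one event and one contact roughly look like on the wire. The function names, UID, PRODID and example addresses are all made up for illustration, and the sketch skips the escaping and line-folding rules a real client has to follow.

```python
from datetime import datetime, timezone


def _stamp(dt: datetime) -> str:
    """Format a timezone-aware datetime as an iCalendar UTC timestamp."""
    return dt.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")


def build_vevent(uid: str, summary: str, start: datetime, end: datetime) -> str:
    """Return a minimal iCalendar object holding a single event.

    Illustrative only: text values are not escaped and long lines are
    not folded, both of which a real client needs to handle.
    """
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//example//blog-sketch//EN",  # hypothetical product id
        "BEGIN:VEVENT",
        f"UID:{uid}",
        f"DTSTAMP:{_stamp(start)}",  # reusing the start time to keep output stable
        f"DTSTART:{_stamp(start)}",
        f"DTEND:{_stamp(end)}",
        f"SUMMARY:{summary}",
        "END:VEVENT",
        "END:VCALENDAR",
    ]
    # iCalendar uses CRLF line endings.
    return "\r\n".join(lines) + "\r\n"


def build_vcard(family: str, given: str, email: str) -> str:
    """Return a minimal vCard 3.0 entry for one contact."""
    lines = [
        "BEGIN:VCARD",
        "VERSION:3.0",
        f"N:{family};{given};;;",  # structured name: family;given;additional;prefix;suffix
        f"FN:{given} {family}",    # display name
        f"EMAIL:{email}",
        "END:VCARD",
    ]
    return "\r\n".join(lines) + "\r\n"
```

A client would typically `PUT` each of these as its own resource inside a calendar or address book collection on the server; the exact collection URLs depend on the server and aren’t shown here.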

These two standards have a number of implementations, both open source and proprietary. Of note are Apple Calendar Server, which is Apple’s open source implementation, and DAViCal, an open source LAMP-based server solution that is becoming quite popular.

I’ve used both solutions – my employer runs an Apple Calendar Server after getting fed up with not having free/busy between engineers. Whilst we ended up running a MacOS server, the Linux ports have improved and there are resources for setting it up on a Linux or even BSD host.

Apple Calendar Server works reasonably well with Evolution. I never have any issue booking events, however Evolution appears unable to accept or deny meeting requests, forcing me to go to the calendar server web interface, which is actually pretty horrific.

I decided I wouldn’t use it for my own personal calendaring. Even if I went to the effort of porting it onto my Linux servers, it wouldn’t really be the solution I ideally want, as it lacks a lot of features and isn’t as easy to configure as other Linux services.

Instead I had a look at DAViCal. It’s a feature-packed calendaring and contacts application developed primarily by Morphoss in Wellington NZ, started by Andrew McMillan of ex-Catalyst IT fame.

Despite having an annoyingly tricky name to type (you try typing it for the 100th time at 3am without typoing on the capitalization!!), the software itself appears reliable and worked across a number of devices when I ran tests.

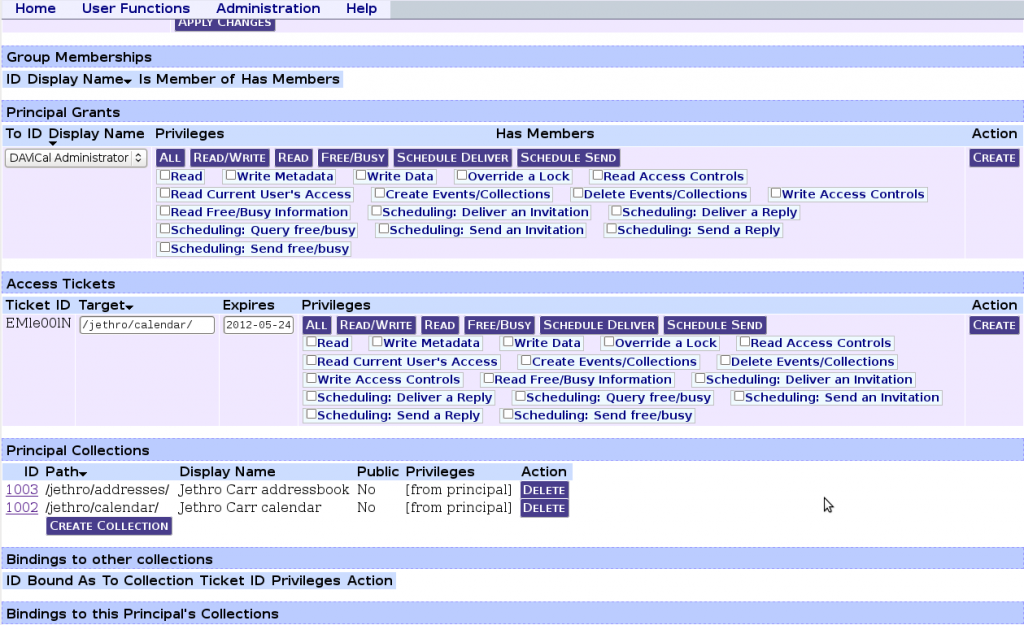

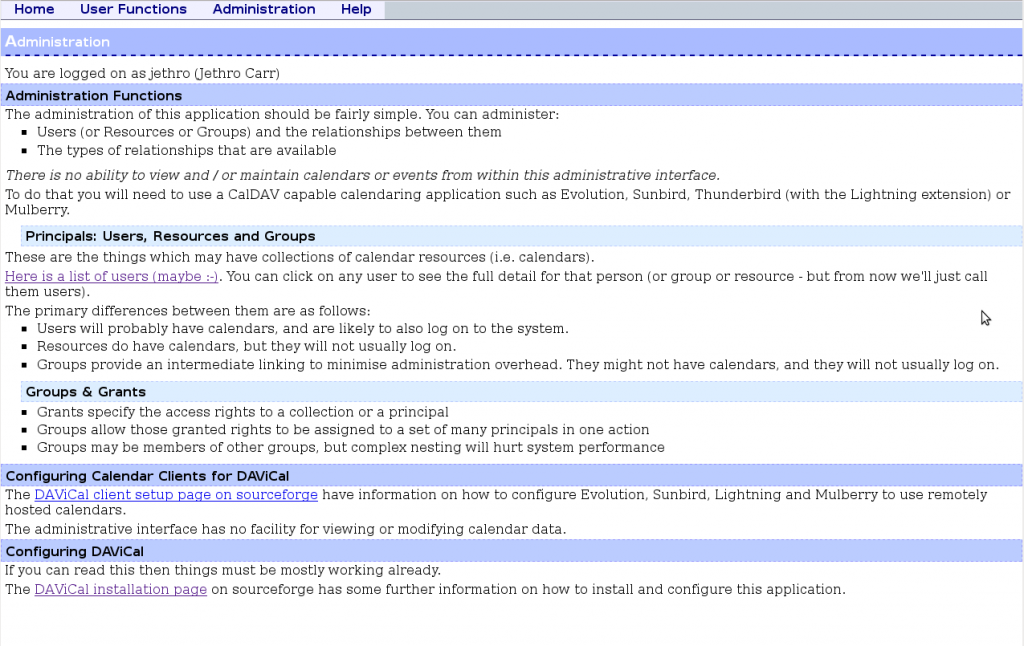

It’s not perfect. I have some issues with the user interface design, which, while very functional and effective, is not that intuitive to a new user and exposes far too many options to them at the beginning. Ideally it would have a simple/advanced option, so a user who just wants to add user calendars and do basic stuff can do so, then dig into more detailed ACLs, tokens, shared calendars, etc. as needed.

Naturally it’s open source, so I should stop complaining and hack up some code to demonstrate what I think might be better. Maybe if people would stop stealing my car I’d have time to get something done. :-/

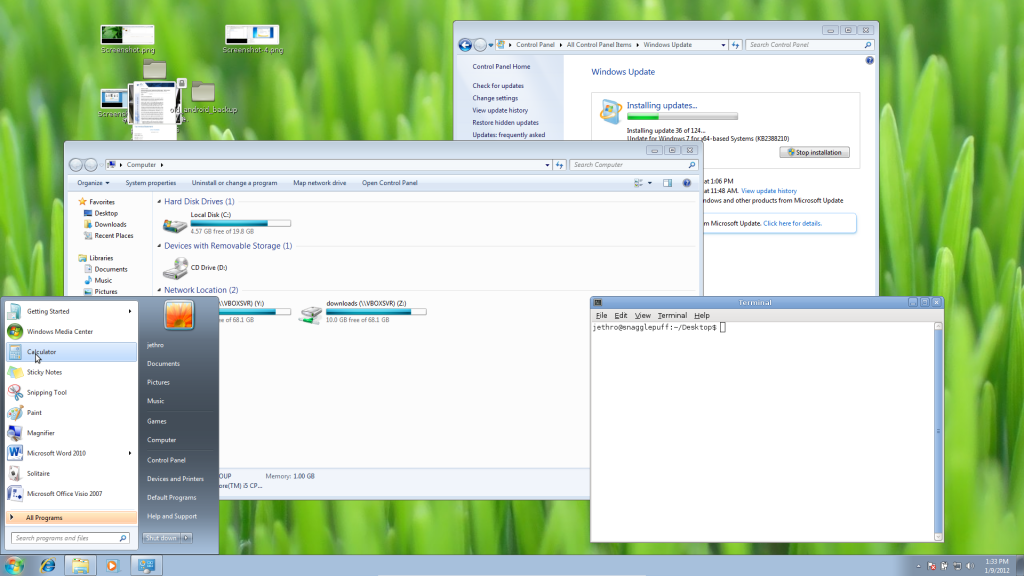

Main Screen Turn On! (Maybe some more 1-2-3 clear setup flows here would be nice, the wall of text is kind of offputting for visual people like myself).

The web-based interface is only for administration; there isn’t a web-based calendar app provided with DAViCal. Instead, choose any CalDAV client you wish to use with it, whether it’s web-based or client-side.
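
For a rough idea of what “any CalDAV client” actually does when it talks to the server, here’s a small Python sketch that builds the XML body of a CalDAV `calendar-query` report asking for every event in a calendar. It isn’t taken from DAViCal or any particular client; the function name is my own invention, and sending the request (an HTTP `REPORT` against the calendar collection URL, which varies by server and user) is deliberately left out.

```python
import xml.etree.ElementTree as ET

DAV_NS = "DAV:"
CALDAV_NS = "urn:ietf:params:xml:ns:caldav"


def build_calendar_query() -> str:
    """Build the body of a calendar-query REPORT asking for all VEVENTs.

    Hypothetical helper for illustration; a real client would usually
    also add a time-range filter and handle the multistatus response.
    """
    # Friendlier prefixes in the serialized output (optional, cosmetic).
    ET.register_namespace("d", DAV_NS)
    ET.register_namespace("c", CALDAV_NS)

    root = ET.Element(f"{{{CALDAV_NS}}}calendar-query")

    # Ask for each event's ETag and its iCalendar data.
    prop = ET.SubElement(root, f"{{{DAV_NS}}}prop")
    ET.SubElement(prop, f"{{{DAV_NS}}}getetag")
    ET.SubElement(prop, f"{{{CALDAV_NS}}}calendar-data")

    # Only match VEVENT components nested inside a VCALENDAR.
    flt = ET.SubElement(root, f"{{{CALDAV_NS}}}filter")
    vcal = ET.SubElement(flt, f"{{{CALDAV_NS}}}comp-filter", name="VCALENDAR")
    ET.SubElement(vcal, f"{{{CALDAV_NS}}}comp-filter", name="VEVENT")

    return ET.tostring(root, encoding="unicode")
```

Because this is all plain HTTP and XML underneath, a desktop client, a phone app and a web front-end can all speak to the same server.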

I haven’t given DAViCal’s feature set a full workout yet. At this stage I’ve just set up my personal calendar, contacts and todo list on both Evolution and my Android ICS phone, but haven’t touched meeting requests, shared calendars and free/busy information.

Partly my testing is a bit limited since I’m only running Evolution 2.30.3 (Debian Stable) which is a little outdated and it looks like there’s some functionality missing/broken that might not be an issue any more.

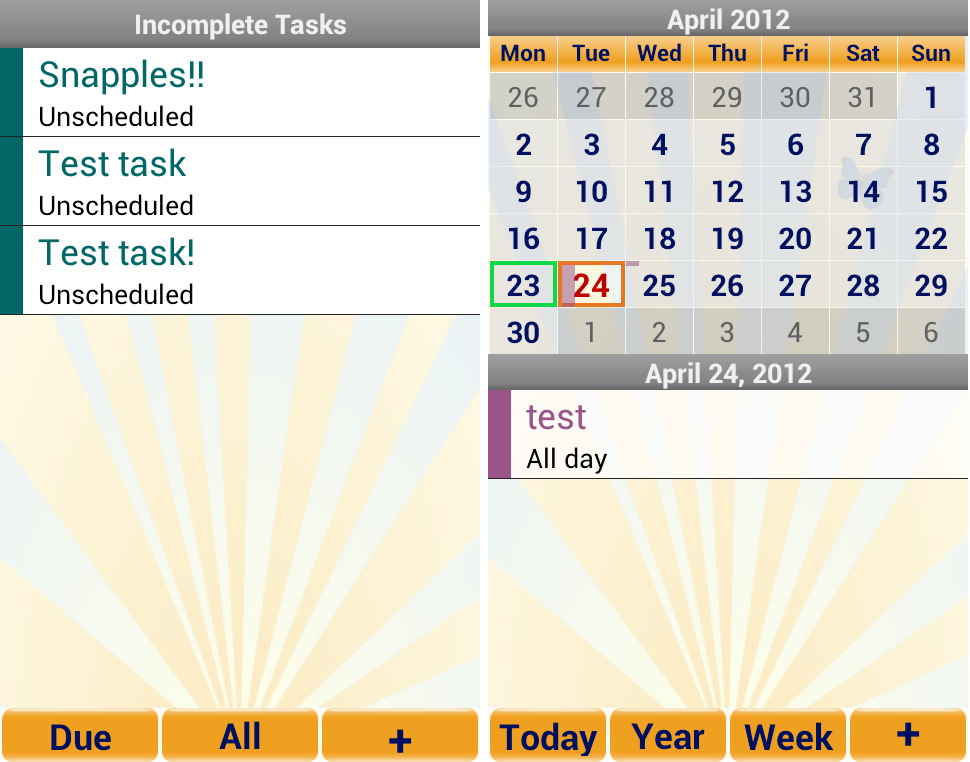

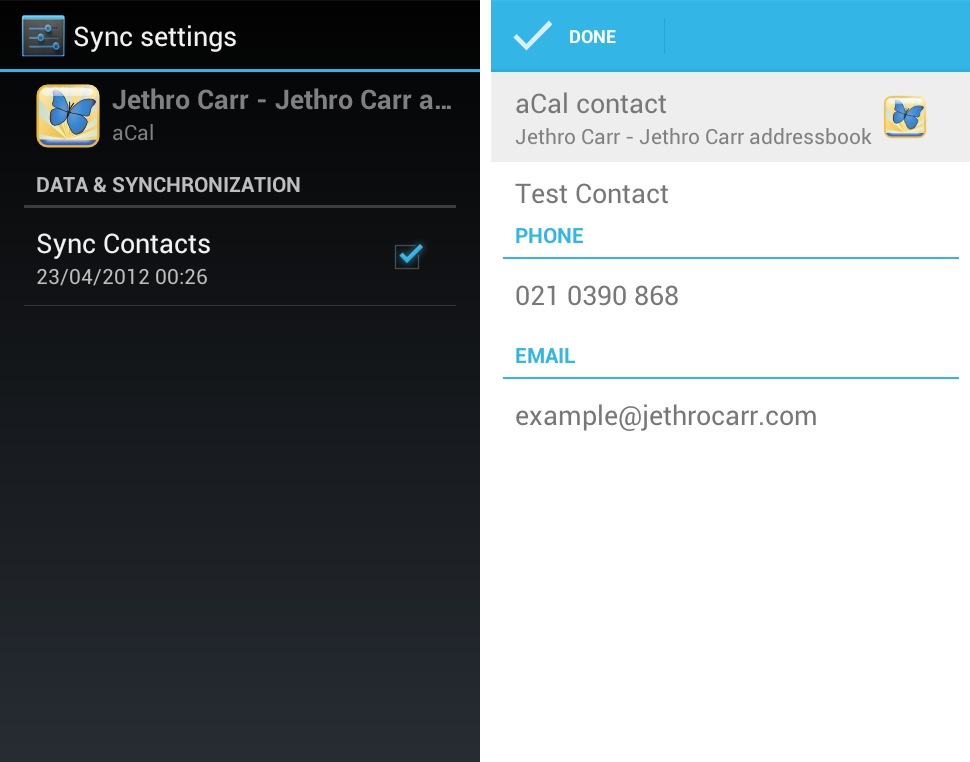

On the mobile side, I’m using “aCal”, an open source Android application written by the DAViCal developer, providing a CalDAV calendar, todo list and read-only contact/address book synchronization.

This now means I can add, edit and delete calendar and task entries on either my Android phone or Linux laptop via Evolution and have it propagate to the other device – although unfortunately this is based on polling, rather than push (looks like push is possible theoretically via an extension to the standard, works with iCal).
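
A rough sketch of what polling-based sync generally involves (this is not how aCal or Evolution actually implement it, just the common ETag-comparison idea): on each poll the client asks the server for the ETag of every entry in the collection, compares that against what it saw last time, then fetches only what changed. The function name and the example paths below are hypothetical.

```python
def diff_etags(known: dict, remote: dict) -> tuple:
    """Work out what a polling client needs to do after one poll.

    known  -- {href: etag} as recorded at the previous poll
    remote -- {href: etag} as just reported by the server
    Returns (to_fetch, to_delete), both sorted lists of hrefs.
    """
    # New entries and entries whose ETag changed must be downloaded again.
    to_fetch = sorted(href for href, etag in remote.items()
                      if known.get(href) != etag)
    # Entries the server no longer lists were deleted elsewhere.
    to_delete = sorted(href for href in known if href not in remote)
    return to_fetch, to_delete


# Example poll: b.ics was edited, c.ics was deleted, d.ics is new.
known = {"/cal/a.ics": "1", "/cal/b.ics": "1", "/cal/c.ics": "1"}
remote = {"/cal/a.ics": "1", "/cal/b.ics": "2", "/cal/d.ics": "1"}
to_fetch, to_delete = diff_etags(known, remote)
```

The trade-off is that a change made on one device only shows up on the other after its next poll, which is exactly the delay that push would avoid.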

I can also get read-only copies of all my contact information from Evolution synced through to my phone, but sadly there isn’t support for editing contacts on the Android phone just yet.

I did also consider using LDAP for my address book entries, but CardDAV looks like a better designed solution, it’s very rare that I don’t see “LDAP” and “headache” mentioned in the same sentence, and this comes from someone maintaining and supporting LDAP enterprise environments…

Essentially the main problem with LDAP is that there isn’t an exact standard for address entries, so what works for one client might not work 100% for another, along with a limited selection of decent applications for actually managing LDAP address databases.

Also, some clients treat LDAP as if it’s going to be a million+ record store and expose a different UI compared to that of smaller address books, which harms the user experience (*glares at Evolution*).

aCal & Android ICS address book integration - note the uneditable edit screen on the right, read-only for now :-(

The other main issue with aCal is that it doesn’t sync with the native OS calendar program, but instead provides its own. Digging through the documentation and mailing list, this appears to be due to the native application lacking support for some of the functionality needed for a proper CalDAV implementation, so a sync solution would leave certain features missing, although I’d still like the option.

Of course these are limitations of aCal, not DAViCal or the standards themselves – there are some other CalDAV & CardDAV sync programs available in the Android market under non-open licenses, which you have the option of trying.

The nice thing about using standards is that you can have multiple vendors competing to make the best product/tool for their customers’ needs, not simply using lock-in to maintain/force a customer base. :-)

Overall DAViCal seems really nice and in my testing has been quite reliable – I’m now moving on to more rigorous testing and am in the process of migrating my calendar and contacts information into it. Once I start using it daily in the real world, the true testing begins.

I’m keen to take a look at what options I have around exposing some information publicly, e.g. sharing schedule free/busy with friends on different servers.