I run a large GNU/Linux server with KVM for running numerous virtual machine guests, including build hosts used to package and compile software for different GNU/Linux distributions and other operating systems.

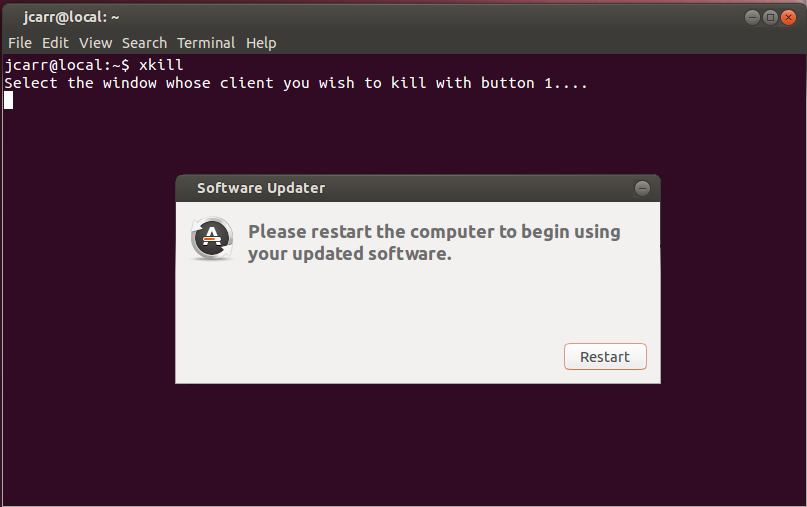

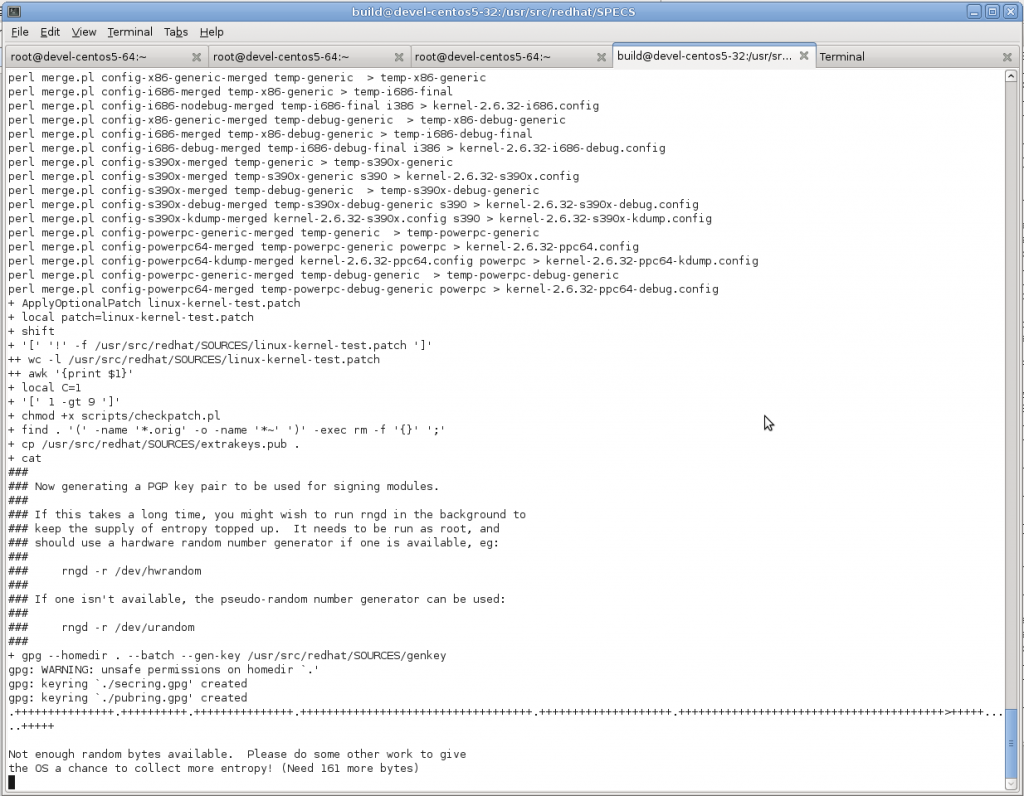

I recently ran into an issue during a kernel compile where the kernel compile hung indefinitely whilst GPG (tried) to sign kernel modules as part of the build process, due to the virtual machine guest running out of available entropy and being unable to proceed until more random data was available.

Bro, I’m stalled as bro!

On Linux there are two sources of random data – /dev/random, which provides high quality random data and /dev/urandom which provides an unlimited amount of pseudo-random data based on a seed value taken from the random pool initially.

Linux generates this random data by collecting entropy from somewhat-random events, such as disk activity, network activity, keyboard, mouse and other sources. When the pool of entropy is exhausted, /dev/random will block (ie force processes to freeze) until more is available, whereas /dev/urandom will continue to serve continuous pseudo-random data, although the quality of the random data is not considered as secure as /dev/random.

On a workstation or single server this tends to be enough to generate sufficient random data for most applications (although if you’re doing certain tasks you may still have an issue). Virtual machines on the other hand, lack hardware sources of entropy such as disks or keyboards and it’s very easy to quickly exhaust the available entropy pool and have some applications block until more is available.

Applications like Apache (with mod_ssl) and OpenSSL use /dev/urandom so aren’t impacted by shortages of entropy, but some signing processes, such as GPG require /dev/random and can be impacted if the source of entropy is exhausted – which is exactly what happened to my kernel signing process.

It’s pretty easy to use to test and see how quickly a Linux system re-fills the entropy pool by running a test to read data from /dev/random, forcing the pool to empty and be repopulated.

# dd if=/dev/random of=/dev/null count=1000

0+1000 records in

16+1 records out

8496 bytes (8.5 kB) copied, 149.849 s, 0.1 kB/s

The host doing this test has around 12 physical hard disks, 10 active KVM virtual machines spewing out packets, an unfiltered WAN link feeding random junk – all which is good for generating a decent amount of entropy. The numbers may look pretty bad, but when compared with the amount of entropy generated by my laptop…

# dd if=/dev/random of=/dev/null count=1000

0+1000 records in

16+1 records out

8409 bytes (8.4 kB) copied, 1389.95 s, 0.0 kB/s

The rate of entropy generation on my laptop is quite depressing – but at least my laptop has a keyboard, mouse and hardware environmental values to help add sometime to the entropy sources.

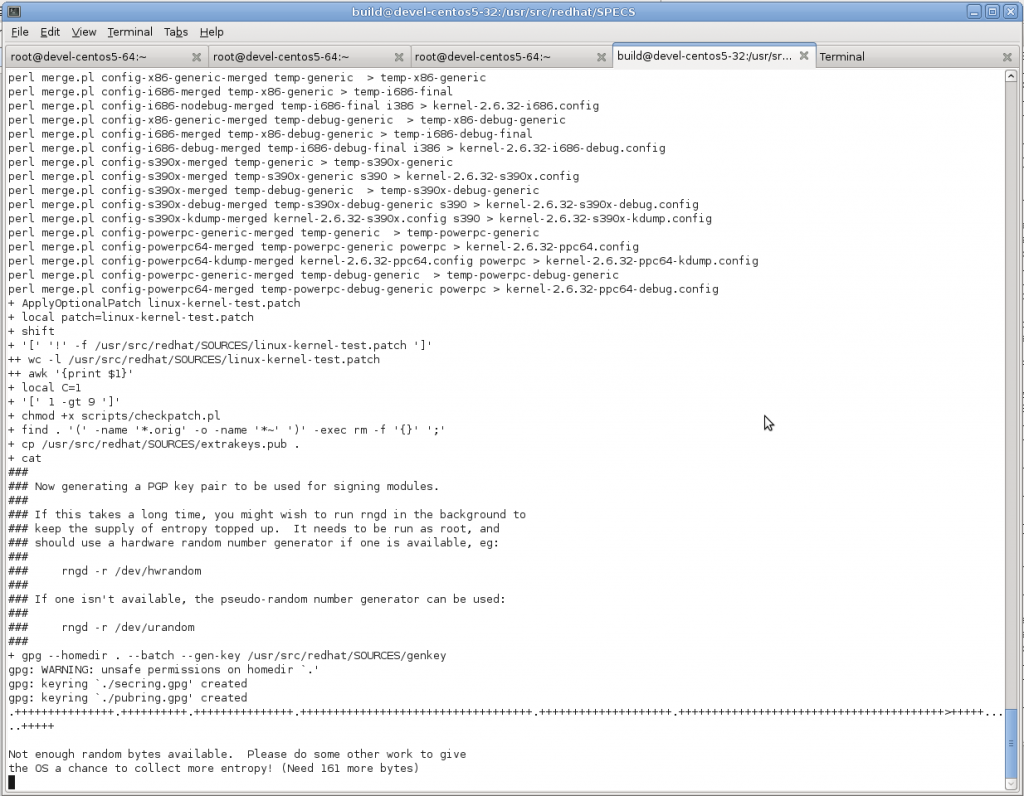

When I run the same test on a virtual machine guest, which lacks all these physical sources, it comes to a grinding halt:

# dd if=/dev/random of=/dev/null count=10000

0+24 records in

0+0 records out

0 bytes (0 B) copied, 1865.68 s, 0.0 kB/s

I was forced to kill the above test due to it timing out indefinitely thanks to the host running out of any available entropy and being unable to generate any more to complete the test. :-(

Even when performing an intensive activity such as compiling a large software library, it still takes considerable time to complete this test on a VM:

# dd if=/dev/random of=/dev/null count=1000

0+1000 records in

15+1 records out

8018 bytes (8.0 kB) copied, 2560.36 s, 0.0 kB/s

It seems that the lack of the random data generated by active physical hardware is too much for the VM guest to be able to complete the test. And whilst some applications like an HTTPS website would continue to operate fine, others like a build host GPG-signing packages may fail and hang indefinitely, unable to obtain the required volume of random data to complete it’s key generation process.

For times when this lack of entropy becomes an issue for your applications, it is possible to obtain additional entropy from a hardware random number generator – this can be as simple as using a feed such as analog noise from the sound card or as sophisticated as a hardware random number generator or functionality built into certain CPUs which is designed to be extremely random and unpredictable.

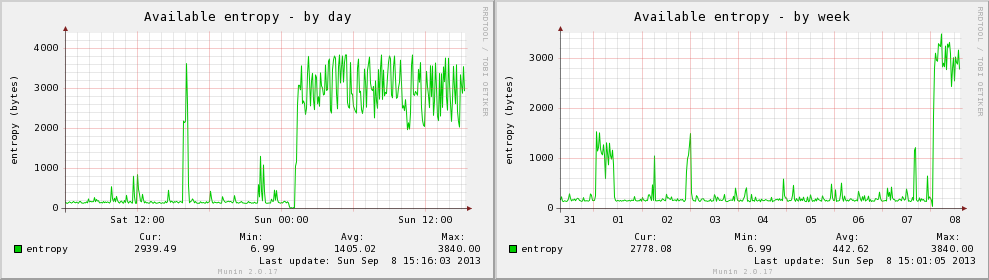

A while ago I picked up a pair of Simtec Electronic’s Entropy Keys, a small USB device which generates truly random sources of data by a clever method of abusing semiconductors and connected one to my primary KVM servers.

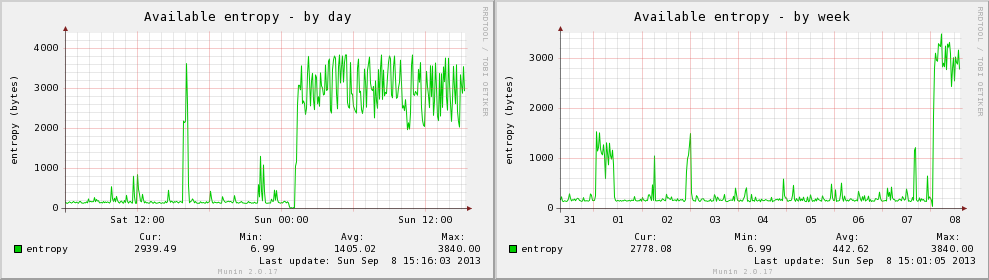

The device ships with an open source daemon that takes random data from the key and injects it into the Linux entropy pool for use by all /dev/random using applications. It instantly makes a huge difference to the available volume by generating almost 3.9KB/s of random data.

Gain entropy with just 1 easy repayment! Call now!

After starting the daemon and re-running the test, the performance looks much better:

# dd if=/dev/random of=/dev/null count=1000

0+1000 records in

145+1 records out

74504 bytes (75 kB) copied, 21.8926 s, 3.4 kB/s

The numbers are still low, but the reality is you generally you only need a few bytes at a time, rather than massive volumes like this test demands – for general signing usage, 3.4kB/s is a huge volume to have.

So whilst this test doesn’t reflect the real way /dev/random is used, it does illustrates the difference in data volume a proper random number generator can make. And whilst this might not be a common problem thanks to the low volume of random data required for most applications to function, the increasing use of virtualisation makes this issue possibly one that people may bump into more in future.

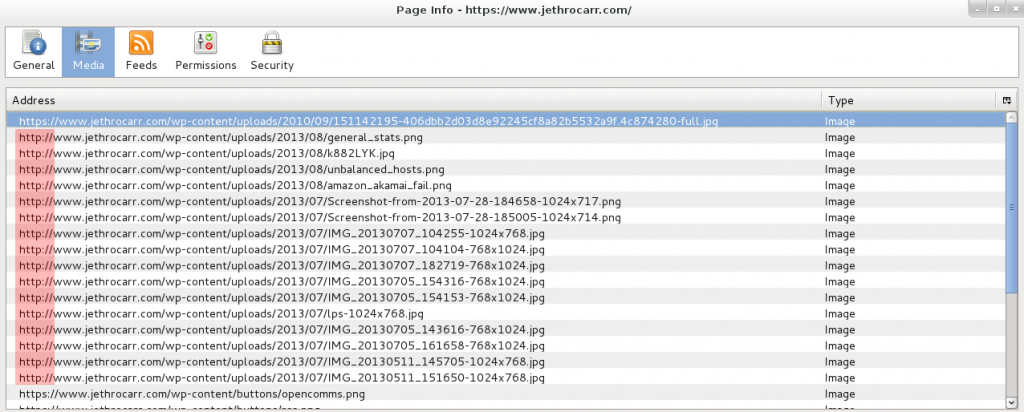

Now that I have my host server getting a reliable and steady flow of random data, my next step is to share that data to the virtual machines running on the host – as I’m doing all my signing in guests, it’s vital that I get that random data through to them,

I’m in the process of investigating a few different options and will cover these in a follow up blog post, as it’s a somewhat sizeable topic in it’s own right.