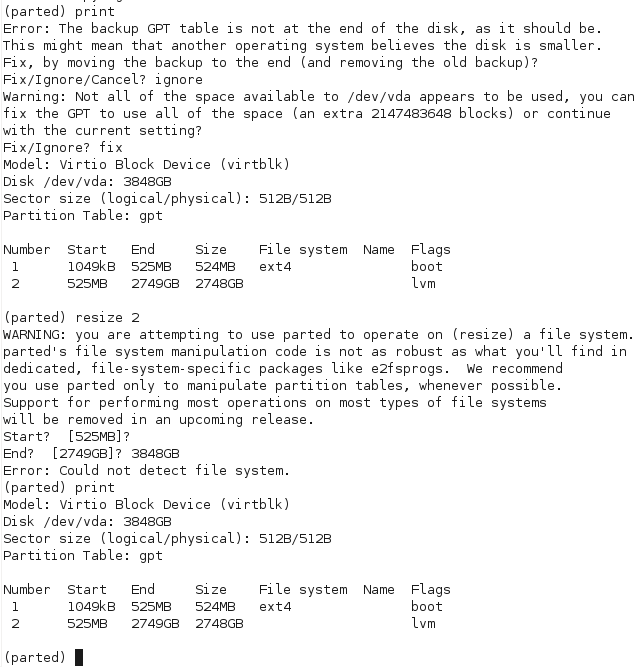

My file server virtual machine passed the 2TB limit a couple of months ago, which forced me to get around to upgrading it to RHEL 6 and moving from MSDOS to GPT partitioning, as the MSDOS partition table doesn’t support partitions larger than 2TB.
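The 2TB figure falls out of the MSDOS (MBR) table format, which stores partition start and length as 32-bit sector counts. As a quick sanity check, assuming the usual 512-byte logical sectors (`mbr_max_bytes` is just an illustrative helper name, not a real tool):

```shell
# Largest size a single MSDOS (MBR) partition entry can describe, in bytes.
# The table stores sector counts in 32 bits, so the cap is 2^32 sectors.
mbr_max_bytes() {
    sector_size=${1:-512}   # logical sector size in bytes (512 assumed)
    echo $(( (1 << 32) * sector_size ))
}

mbr_max_bytes 512   # prints 2199023255552, i.e. 2 TiB
```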

I recently had to grow it by another 1TB to keep up with disk usage and got stuck trying to resize the physical volume – the trusty old fdisk command doesn’t support GPT partitions, and most documentation directs you to use parted instead.

The problem with parted is that the developers have tried to be clever and made it filesystem aware, so it will perform filesystem operations as well as block partition operations. Secondly, parted writes changes to disk as you make them, rather than letting you review the final result and then either write it to the partition table or discard it.

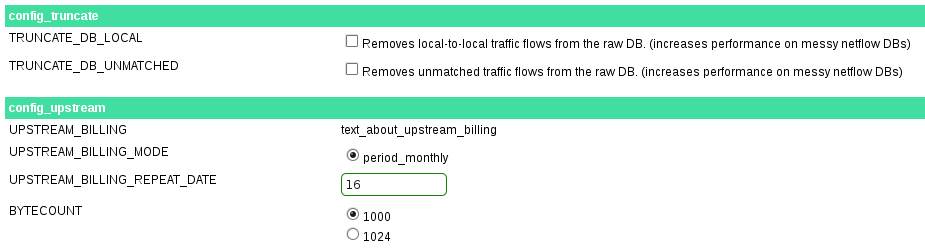

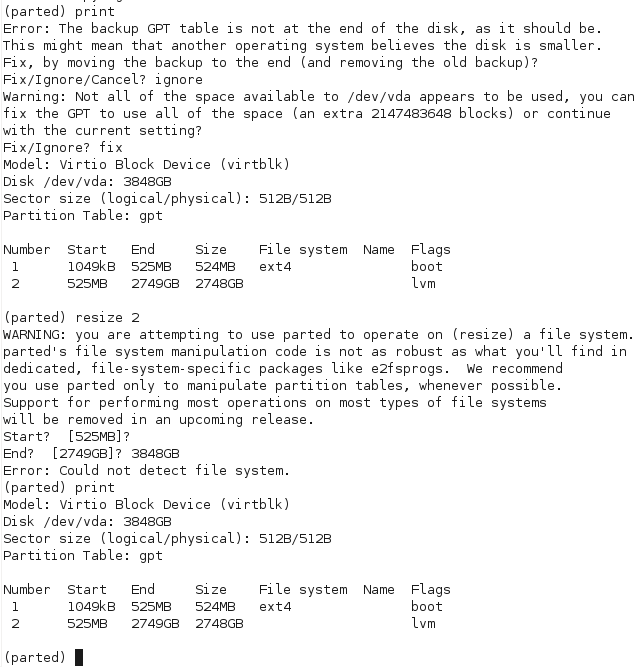

This breaks really badly for my LVM physical volume partitions – as you can see below, parted has a resize command, but when used against an LVM physical volume it is unable to recognize it as a known filesystem type and fails with the very helpful “Error: Could not detect file system”.

Naturally this didn’t put parted into my good books for the evening – a search of the documentation didn’t really clarify whether the old fdisk approach of deleting and re-creating partitions at the same start and end positions was safe, and what documentation there was suggested that it is a destructive process. Seeing as I really didn’t feel like having to pull 2TB of data off backup, I chose caution and decided not to test that poorly documented behavior.

The other suggested option is to just create an additional partition and add it to LVM – whilst there’s no technical reason against this method, it really offended my OCD and the desire to keep my server’s partition table simple and logical – I don’t want lots of weirdly sized partitions littering the server from every time I’ve had to upsize the virtual machine!

Whilst cursing parted, I wondered whether there was a tool just like fdisk, but for GPT partition tables. Linux geeks do like to poke fun at fdisk for having a somewhat obscure user interface and basic feature set, but once you learn it, it’s a powerful tool with excellent documentation, and its simplicity lets it perform a number of very tricky tasks, as long as the admin knows what they’re doing.

Doing some research led me to gdisk, which, as the name suggests, is a GPT-capable clone of fdisk, providing a very similar user interface and functionality.

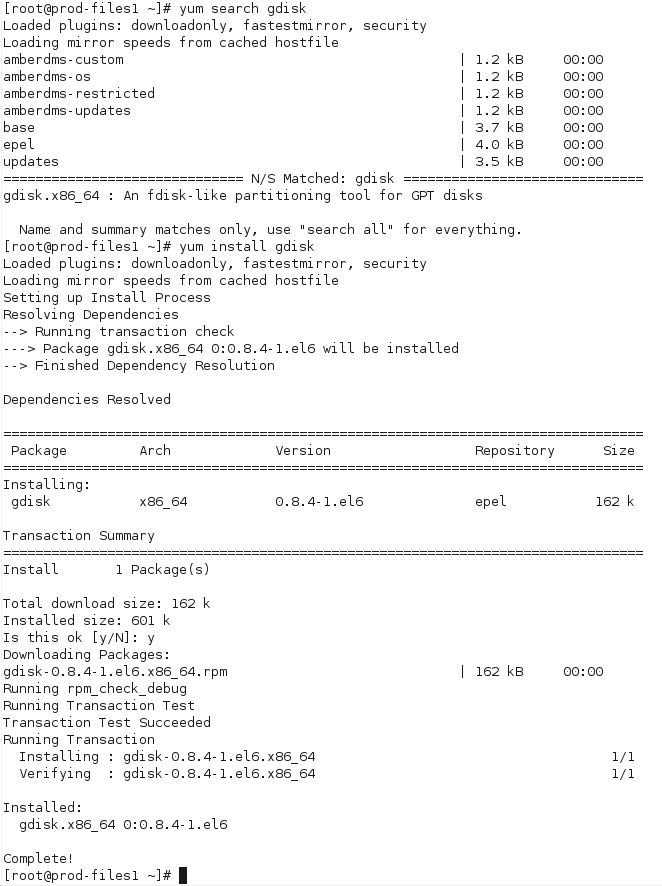

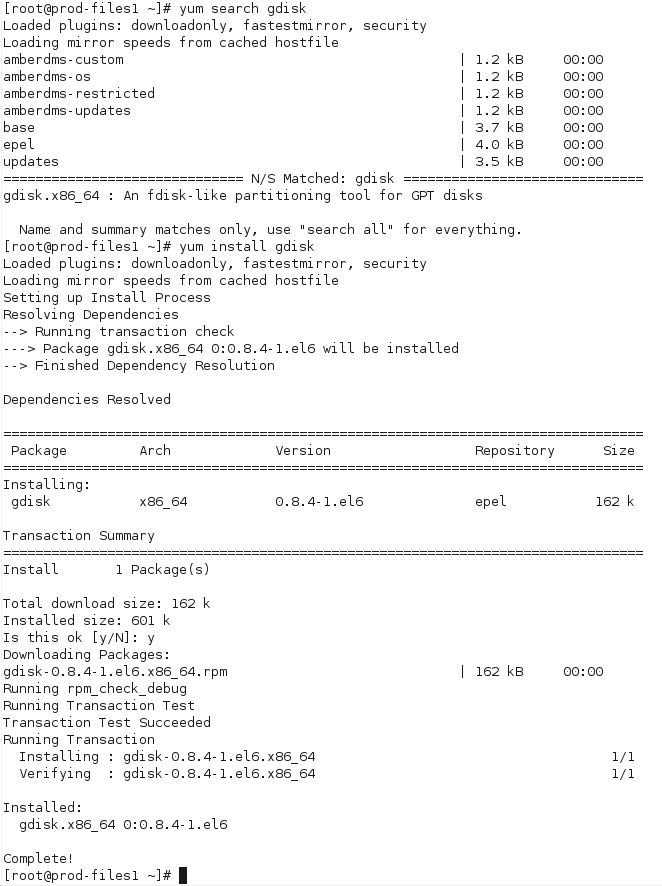

Whilst it’s not part of RHEL’s core package set, it is available in the EPEL repositories, which are hopefully acceptable in your environment:

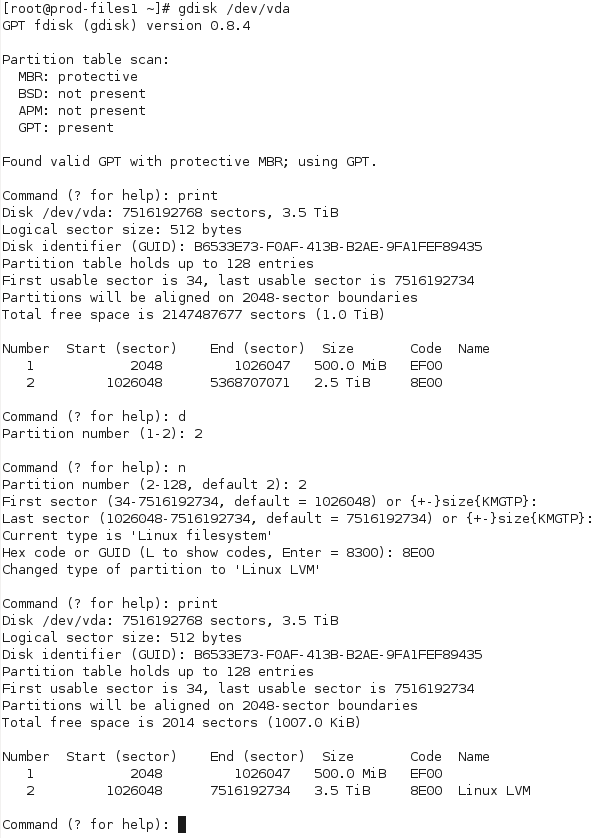

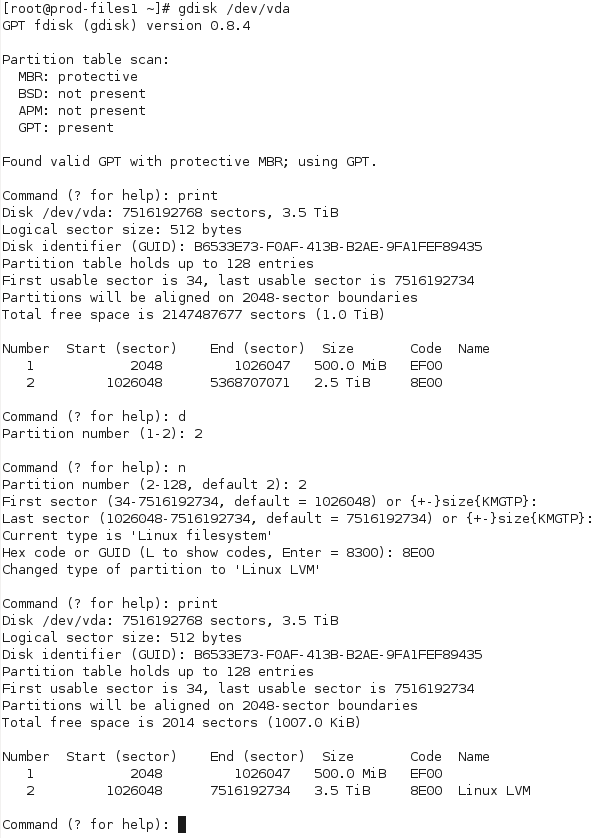
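For anyone scripting this, a small sketch of a pre-flight check is shown here. It only reports whether gdisk is present and prints the install command rather than running it; `have_cmd` is a made-up helper name, and the `yum` line assumes EPEL is already enabled on the host.

```shell
# Return success if the named command is on the PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd gdisk; then
    echo "gdisk is already installed"
else
    # Assumption: the EPEL repository is configured on this machine.
    echo "gdisk is missing; install it with: yum install gdisk"
fi
```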

Once installed, it was a pretty simple process of loading gdisk, deleting the partition and re-creating it at the new, larger size:

The most important thing is to verify that the start sector hasn’t changed between deleting the old partition and adding the new one – as long as the start sector is the same and the new partition is the same size or larger than the old one, everything will be OK.

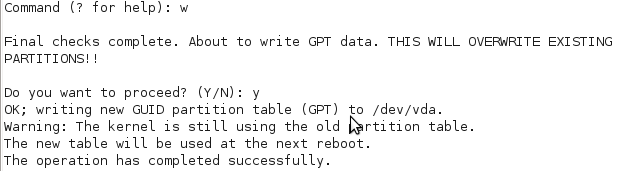

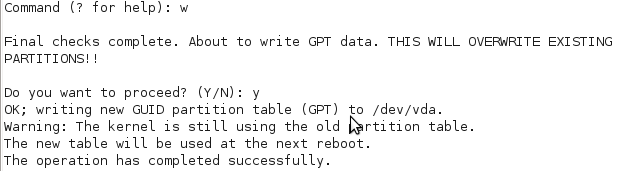
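The start-sector rule is easy to check mechanically before writing the new table. The following is a minimal sketch and not part of gdisk itself: `resize_is_safe` is a hypothetical helper, and the sector numbers in the example are made up for illustration. Real values would come from gdisk’s `p` (print) output, or from `gdisk -l /dev/vda`, taken before deleting the partition and again after re-creating it.

```shell
# Succeeds only when re-creating the partition keeps the existing data
# reachable: identical start sector, and an end sector that has not shrunk.
# Arguments: old_start old_end new_start new_end (all in sectors).
resize_is_safe() {
    old_start=$1
    old_end=$2
    new_start=$3
    new_end=$4
    [ "$new_start" -eq "$old_start" ] && [ "$new_end" -ge "$old_end" ]
}

# Hypothetical sector values, for illustration only.
if resize_is_safe 1026048 4294967262 1026048 6442450910; then
    echo "safe to write"
else
    echo "do NOT write"
fi
# prints "safe to write"
```

If the check fails, quitting gdisk with `q` instead of writing with `w` leaves the on-disk table untouched, which is exactly the safety net that parted lacks.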

Save and apply the changes:

On my RHEL 6 KVM virtio VM, I wasn’t able to get the OS to recognize the new partition size, even after running partprobe, so I had to reboot the VM.

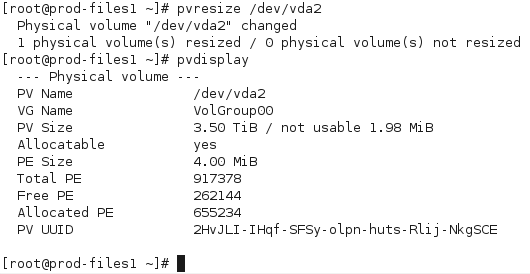

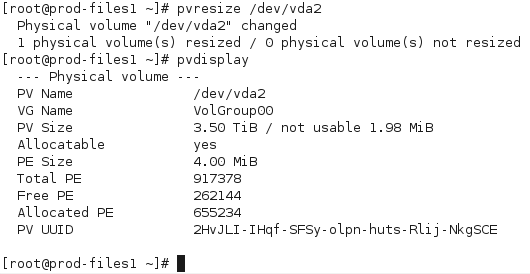

Once rebooted, it was a simple case of issuing pvresize and pvdisplay to confirm the new physical volume size – from there, I can then expand LVM logical volumes as desired.
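As a rough sketch, the post-reboot steps look something like the following. The device name `/dev/vda2` is an assumption (the second partition on the virtio disk), and the volume group and logical volume names are purely hypothetical. The `run` wrapper is my own addition so the script only prints the commands unless `APPLY=1` is set.

```shell
# Dry-run by default: print each command instead of executing it.
# Set APPLY=1 (and run as root) to actually execute.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

PV=/dev/vda2                  # assumed: the partition holding the LVM PV
LV=/dev/vg_data/lv_files      # hypothetical volume group / logical volume

run pvresize "$PV"            # grow the PV to fill the enlarged partition
run pvdisplay "$PV"           # confirm the new PV Size and Free PE
run lvextend -l +100%FREE "$LV"   # optional: hand all free extents to one LV
```

Extending the logical volume does not grow the filesystem inside it; that still needs its own resize step with whatever tool matches the filesystem in use.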

Note that pvdisplay is a bit annoying in that it won’t show any unallocated space on the partition – what it means by Free PE is free physical extents: disk that the LVM physical volume already occupies but which isn’t allocated to logical volumes yet. Until you run pvresize, you won’t see any change to the size of the physical volume.
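To turn the Free PE count into an actual size, multiply it by the PE Size that pvdisplay reports. A small sketch, assuming the LVM default extent size of 4 MiB (the helper name and the extent count below are made up for illustration):

```shell
# Free space inside a physical volume, in MiB: Free PE count x PE size.
free_pe_mib() {
    free_pe=$1
    pe_size_mib=${2:-4}   # 4 MiB is the LVM default; check "PE Size" in pvdisplay
    echo $(( free_pe * pe_size_mib ))
}

free_pe_mib 262144 4   # prints 1048576 (MiB), i.e. 1 TiB of unallocated extents
```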

So far gdisk looks pretty good and I suspect it will become a standard tool on my own Linux servers, but not being in the base RHEL repositories will limit its usage a bit on commercial and client systems, which often have very locked down and limited package sets.

The fact that I need a partition table at all with my virtual machines is a bit of a pain; it would be much nicer if I could just turn the whole /dev/vda drive into an LVM physical volume and then boot the VM from a logical volume inside it.

As things currently stand, it’s necessary to have a non-LVM /boot partition, so I have to create one small conventional partition for boot and a second partition consuming all remaining disk for actual data.