Unfortunately with my recent project enabling IPv6 across my entire personal server environment, I’ve bumped into a number of annoying issues – nothing that isn’t fixable, but things that are generally frustrating and which just shouldn’t be an issue.

Particular thanks go to my many RHEL/CentOS 5 virtual machines, which have some pretty key problems, such as:

- Missing IPv6 connection tracking, which prevents the ESTABLISHED,RELATED ip6tables rules from working.

- Unexpected behavior of certain bootscript configuration options.

- Lack of IPv6 support with CIFS (Samba/SMB) share mounting.

- Some weirdness with Dovecot I still need to resolve.

(Personally, based on the number of headaches I’ve found with RHEL 5, my recommendation is to accelerate any plans to upgrade to RHEL 6 – or some other distribution – before deploying IPv6 in production.)

At the moment, CIFS IPv6 support on RHEL 5 & 6 has been causing me the most pain. My internal file server is dual stacked and has both A and AAAA DNS records. It’s a stock-standard CentOS 6 box running distribution-shipped Samba packages; it works perfectly from the server side, and modern IPv6 hosts have no issue mounting the shares via IPv6.

Very typical dual stack configuration:

# host fileserver.example.com

fileserver.example.com has address 192.168.0.10

fileserver.example.com has IPv6 address 2001:0DB8::10

However, when I run the following legitimate and syntactically correct command on other RHEL 5 hosts to mount the CIFS share provided by the Samba server, it breaks with an error message that is typical of incorrect syntax in the mount options:

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody

mount: wrong fs type, bad option, bad superblock on //fileserver.example.com/tmp,

missing codepage or other error

In some cases useful info is found in syslog - try

dmesg | tail or so

Taking a look at the kernel log, it shows a non-descriptive error explanation:

kernel: CIFS VFS: cifs_mount failed w/return code = -22

This isn’t particularly helpful, made more infuriating by the fact that I know the command syntax is correct and should be working perfectly fine.

Seeing as a number of things broke after switching on IPv6 across the entire network, I became even more of a cynical bastard and ran some tests using explicitly specified IPv6 and IPv4 addresses in the mount command.

I found that by passing the IPv6 address instead of the DNS name, you get an additional error message that offers a bit more insight:

kernel: CIFS: ip address too long

Huh. Looks like a textbook IPv6 support bug to me. (Even I have made this mistake in some older generation web apps that didn’t foresee long 128-bit addresses.)
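To illustrate this class of bug (not the actual kernel code, which isn't reproduced here), here is a minimal sketch of a length check sized for IPv4 text. The 15-character limit and the function names `addr_fits` and `check_addrs` are assumptions made up for the example; the real limit in the RHEL 5 kernel may well be different.

```shell
# Hypothetical limit: the longest dotted-quad IPv4 address,
# "255.255.255.255", is 15 characters. The real kernel limit may differ.
ADDR_TEXT_MAX=15

# Succeeds (exit 0) if the address text fits within the limit.
addr_fits() {
    [ "${#1}" -le "$ADDR_TEXT_MAX" ]
}

# Print the length of each address and whether the check accepts it.
check_addrs() {
    for addr in "$@"; do
        if addr_fits "$addr"; then
            echo "$addr (${#addr} chars): ok"
        else
            echo "$addr (${#addr} chars): ip address too long"
        fi
    done
}

check_addrs 192.168.0.10 2001:0DB8::10 2001:0db8:0000:0000:0000:0000:0000:0010
```

Note that a fully written out IPv6 address is 39 characters, while a compressed one like the documentation address above is only 13, so whether an address trips a check like this depends on how it is written.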

In testing, I found that the following commands are all acceptable on a dual-stack network with a RHEL 5 host:

# mount -t cifs //192.168.0.10/tmp /mnt/tmpshare -o user=nobody

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody,ip=192.168.0.10

However, every way of specifying IPv6 fails, as does plain DNS resolution:

# mount -t cifs //2001:0DB8::10/tmp /mnt/tmpshare -o user=nobody

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody,ip=2001:0DB8::10

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody

No method of connecting via IPv6 would work, leaving stock RHEL 5 hosts able to use CIFS shares only via IPv4. :-(

Unfortunately this error is due to a known kernel bug in 2.6.18, which was fixed in 2.6.31, but sadly not backported to RHEL 5’s kernel (as of version 2.6.18-308.8.1.el5 anyway), leaving RHEL 5 users in a position where the stock OS is unable to mount CIFS shares on an IPv6 or dual-stacked network. :-(

The ideal solution would be to patch the kernel to resolve the issue – and in fact, if you are running on a native IPv6-only (not dual-stacked) network, it would be the only way to get a working solution.

However, custom kernels typically aren’t popular on RHEL: they affect the vendor’s support guarantees for the platform and add the headache of tracking and applying security updates yourself, so another approach is needed.

The following methods will all work for stock RHEL/CentOS 5:

- Use the ip=X mount option to override DNS.

- Add an entry to /etc/hosts.

- Have a separate DNS entry that only has an A record for your file servers (i.e. //fileserverv4only.example.com/).

- Disable IPv6 entirely (and suffer the scorn of your cooler IPv6 enabled friends).

These solutions all suck – manually fixed IPs aren’t great for long term supportability, extra DNS records are another management headache, and let’s not even begin to cover why disabling IPv6 entirely is wrong.
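If you do go with the ip=X option, the lookup can at least be scripted rather than hard-coded. The following is only a sketch: the function names `first_ipv4` and `cifs_mount_cmd` are made up for the example, and it assumes your `getent` supports the `ahostsv4` database (a glibc feature). It prints the mount command rather than running it.

```shell
# Print the first IPv4 address for a host name, or nothing if none is found.
# Assumes getent supports the "ahostsv4" database (IPv4-only lookups).
first_ipv4() {
    getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# Build a mount command line with an explicit ip= override.
# Arguments: server share mountpoint ipv4-address [other-options]
cifs_mount_cmd() {
    opts="ip=$4"
    if [ -n "${5:-}" ]; then
        opts="$5,$opts"
    fi
    echo "mount -t cifs //$1/$2 $3 -o $opts"
}

# Example use (needs working DNS, so it is left commented out):
#   ip=$(first_ipv4 fileserver.example.com)
#   cifs_mount_cmd fileserver.example.com tmp /mnt/tmpshare "$ip" user=nobody
```

The address still has to be IPv4, so this only helps on dual-stacked networks, same as the manual options above.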

Of course RHEL 5 is a little outdated now, so I took a look at how RHEL 6 fared. On the plus side, it *can* mount IPv6 shares; all of the following mount commands are accepted without fault:

# mount -t cifs //192.168.0.10/tmp /mnt/tmpshare -o user=nobody

# mount -t cifs //2001:0DB8::10/tmp /mnt/tmpshare -o user=nobody

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody,ip=192.168.0.10

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody,ip=2001:0DB8::10

However, any mount of an IPv6 server using the DNS name will still fail, just as it did with RHEL 5:

# mount -t cifs //fileserver.example.com/tmp /mnt/tmpshare -o user=nobody

The solution is that you need to install the “cifs-utils” package which provides the /sbin/mount.cifs binary offering smarter handling of shares – once installed, all mount command options will work OK on RHEL 6, including the standard DNS-based command we all know and love. :-D

I had always assumed that all Linux systems that could mount CIFS shares had the /sbin/mount.cifs binary installed, but it seems that’s not the case. Rather, the standard /bin/mount command can mount CIFS using just the kernel mount() function.

However, when /bin/mount detects a /sbin/mount.FILESYSTEM binary, it calls that binary instead of calling the kernel mount() directly. These helper binaries can add extra logic and handling to the mount command before passing it through to the Linux kernel.

For example, the following strace from a RHEL 5 host shows that /bin/mount checks for the existence of /sbin/mount.cifs, before going on to call the Linux kernel mount() directly with the provided arguments:

stat64("/sbin/mount.cifs", 0xbfc9dd20) = -1 ENOENT (No such file or directory)

...

mount("//fileserver.example.com/tmp", "/mnt", "cifs", MS_MGC_VAL, "user=nobody,password=nobody") = -1 EINVAL (Invalid argument)
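The helper lookup seen in that strace can be sketched roughly as follows. This is a simplification and not the real mount source: `mount_dispatch` and the `HELPER_DIR` variable are names invented for the example, and the directory is overridable only so the logic can be tried without touching /sbin.

```shell
# Directory searched for mount helpers; /sbin matches the strace above.
# Overridable so the logic can be exercised without root.
HELPER_DIR="${HELPER_DIR:-/sbin}"

# Report how a mount of the given filesystem type would be handled:
# via an external helper if one exists, otherwise via the mount() syscall.
mount_dispatch() {
    helper="$HELPER_DIR/mount.$1"
    if [ -x "$helper" ]; then
        echo "helper:$helper"
    else
        echo "syscall"
    fi
}

# Shows which path a CIFS mount would take on this machine.
mount_dispatch cifs
```

On a stock RHEL 5 box without the helper this would report the direct syscall path, which is exactly where the name-to-address handling goes missing.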

But a RHEL 6 host with cifs-utils installed provides /sbin/mount.cifs, which appears to do its own name resolution, then attempts connections over both IPv4 and IPv6 before deciding which to use and instructing the kernel via the ip=X parameter:

stat64("/sbin/mount.cifs", {st_mode=S_IFREG|0755, st_size=29376, ...}) = 0

clone(Process 1666 attached

...

[pid 1666] mount("//fileserver.example.com/tmp/", ".", "cifs", 0, "ip=2001:0DB8::10,user=nobody,password=nobody") = 0

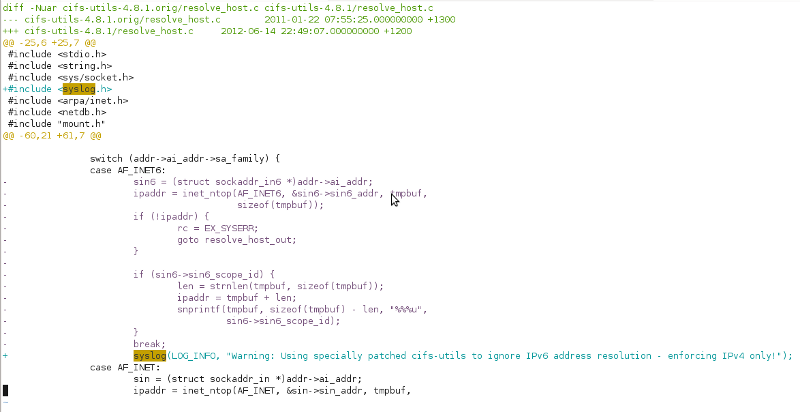

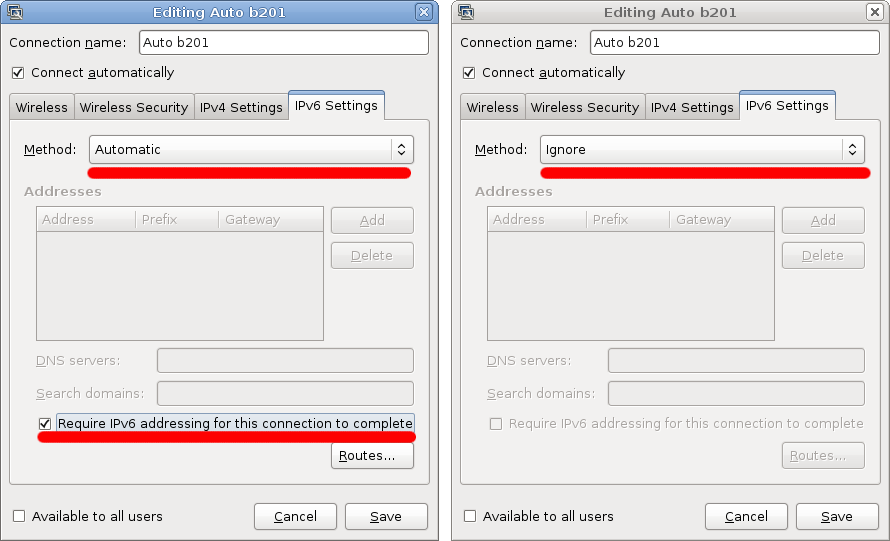

So I had an idea….. what if I could easily modify a version of cifs-utils to run on RHEL 5 dual-stack servers, yet only ever resolve DNS queries to IPv4 addresses to work around the kernel issue? :-D

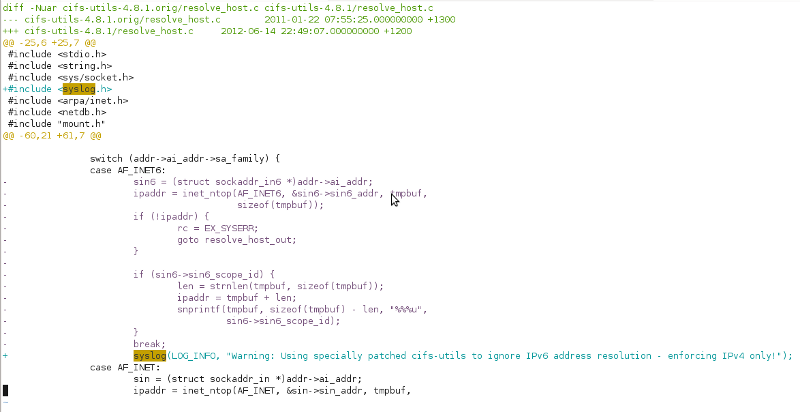

Turns out you can – effectively I just made the nastiest hack ever by just tearing out the IPv6 name resolver. :-/

I’m going to hell for this, but damn, feels good man. ;-)

I wasn’t totally evil: I added an info level syslog notice about the IPv4 enforcement in case any poor admin is ever puzzled by someone’s customized RHEL 5 box refusing to connect to CIFS shares via IPv6 – that would be a bit too cruel. ;-)

The hack is pretty crude: it just breaks the IPv6 socket connection attempt so that the mount falls back to IPv4. This throws up a couple of errors in the logs, but doesn’t actually affect the mounting at all.

mount.cifs: Warning: Using specially patched cifs-utils to ignore IPv6 address resolution - enforcing IPv4 only!

kernel: CIFS VFS: Error connecting to socket. Aborting operation

kernel: CIFS VFS: cifs_mount failed w/return code = -111
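The reason the mount still succeeds despite those errors is the fallback: each candidate address is tried in turn, and a failed attempt only gets logged. A rough sketch of that pattern, not the actual patch, is below. `try_mount` is a stand-in that simulates every IPv6 attempt failing, and `mount_first_working` is a made-up name.

```shell
# Stand-in for a real mount attempt. Any address containing a colon is
# treated as IPv6 and fails, simulating the broken IPv6 connection.
try_mount() {
    case "$1" in
        *:*) return 1 ;;
        *)   return 0 ;;
    esac
}

# Try each candidate address in order and report the first that works.
# Failed attempts are logged to stderr but do not abort the loop.
mount_first_working() {
    for addr in "$@"; do
        if try_mount "$addr"; then
            echo "mounted via $addr"
            return 0
        fi
        echo "attempt via $addr failed, trying next" >&2
    done
    return 1
}

mount_first_working 2001:0DB8::10 192.168.0.10
```

With the IPv6 address listed first, the failure message goes to stderr and the IPv4 address then wins, which mirrors the noisy-but-working behaviour in the logs above.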

But wait, there’s more! I have shiny cifs-utils i386/x86_64/SRPM packages with this evil hack available for download from the amberdms-os repository (or directly from the server here).

Naturally this is a bit of a kludge, so don’t trust it for mission critical stuff. You ONLY need it for RHEL 5, not RHEL 6, and I can’t guarantee it won’t eat all your data and bring upon the end times, etc, etc.

I’ve tested it on my devel systems and it seems like the nicest fix. Sure, it won’t work for any hosts needing to run on native IPv6, but by the time I come to drop IPv4 addressing entirely, I certainly will have moved my last hosts off RHEL 5 to something a bit newer. :-)